Researchers at MIT, who last year designed a tiny computer chip tailored to help honeybee-sized drones navigate, have now shrunk their chip design even further, in both size and power consumption.

The team, co-led by Vivienne Sze, associate professor in MIT’s Department of Electrical Engineering and Computer Science (EECS), and Sertac Karaman, the Class of 1948 Career Development Associate Professor of Aeronautics and Astronautics, built a fully customized chip from the ground up, with a focus on reducing power consumption and size while also increasing processing speed.

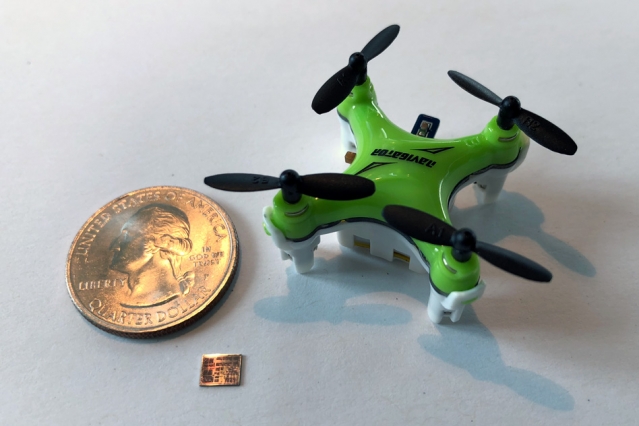

The new computer chip, named “Navion,” which the team is presenting this week at the Symposia on VLSI Technology and Circuits, measures just 20 square millimeters, about the size of a LEGO minifigure’s footprint, and consumes just 24 milliwatts of power, or about one-thousandth of the power needed to light a lightbulb.

Using this tiny amount of power, the chip can process camera images in real time at up to 171 frames per second, along with inertial measurements, and uses both to determine where it is in space. The researchers say the chip can be integrated into “nanodrones” as small as a fingernail to help the vehicles navigate, particularly in remote or inaccessible places where GPS data is unavailable.

The chip could also be used in any small robot or device that needs to navigate over long stretches of time on a limited power supply.

“I can imagine applying this chip to low-energy robotics, like flapping-wing vehicles the size of your fingernail, or lighter-than-air vehicles like weather balloons, that have to go for months on one battery,” says Karaman, who is a member of the Laboratory for Information and Decision Systems and the Institute for Data, Systems, and Society at MIT. “Or imagine medical devices like a little pill you swallow, that can navigate in an intelligent way on very little battery so it doesn’t overheat in your body. The chips we are building can help with all of these.”

Sze and Karaman’s co-authors are EECS graduate student Amr Suleiman, who is the lead author; EECS graduate student Zhengdong Zhang; and Luca Carlone, who was a research scientist during the project and is now an assistant professor in MIT’s Department of Aeronautics and Astronautics.

A new computer chip, smaller than a U.S. dime and shown here with a quarter for scale, helps miniature drones navigate in flight. Image: Courtesy of the researchers

In the past few years, multiple research groups have engineered miniature drones small enough to fit in the palm of your hand. Scientists envision that such tiny vehicles can fly around and snap pictures of your surroundings, like mosquito-sized photographers or surveyors, before landing back in your palm, where they can then be easily stored away.

But a palm-sized drone can only carry so much battery power, most of which goes to driving its motors, leaving very little energy for other essential operations such as navigation and, in particular, state estimation, a robot’s ability to determine where it is in space.

“In traditional robotics, we take existing off-the-shelf computers and implement [state estimation] algorithms on them, because we don’t usually have to worry about power consumption,” Karaman says. “But in every project that requires us to miniaturize low-power applications, we have to now think about the challenges of programming in a very different way.”

In their previous work, Sze and Karaman began to address such issues by combining algorithms and hardware in a single chip. Their initial design was implemented on a field-programmable gate array, or FPGA, a commercial hardware platform that can be configured for a given application. That design performed state estimation using 2 watts of power, compared with the 10 to 30 watts that larger, standard drones typically require for the same tasks. Still, its power consumption exceeded the total amount of power that miniature drones can typically carry, which researchers estimate to be about 100 milliwatts.

To shrink the chip further, in both size and power consumption, the team decided to build a chip from the ground up rather than reconfigure an existing design. “This gave us a lot more flexibility in the design of the chip,” Sze says.

To reduce the chip’s power consumption, the group came up with a design to minimize the amount of data — in the form of camera images and inertial measurements — that is stored on the chip at any given time. The design also optimizes the way this data flows across the chip.

“Any of the images we would’ve temporarily stored on the chip, we actually compressed so it required less memory,” says Sze, who is a member of the Research Laboratory of Electronics at MIT. The team also cut down on extraneous operations, such as multiplications by zero, which always yield zero. The researchers found a way to skip any computational steps involving zeros in the data. “This allowed us to avoid having to process and store all those zeros, so we can cut out a lot of unnecessary storage and compute cycles, which reduces the chip size and power, and increases the processing speed of the chip,” Sze says.
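The article does not describe Navion’s circuits in detail, but the general idea of not storing or computing on zeros can be illustrated with a standard sparse representation: keep only the nonzero entries (a simple form of compression) and multiply only those. The sketch below is a generic illustration under that assumption, not the chip’s actual design; the helper names `compress` and `sparse_dot` are hypothetical.

```python
from typing import List, Tuple


def compress(dense: List[float]) -> List[Tuple[int, float]]:
    """Hypothetical helper: keep only (index, value) pairs for nonzero entries,
    so zeros are never stored."""
    return [(i, v) for i, v in enumerate(dense) if v != 0]


def sparse_dot(sparse: List[Tuple[int, float]], weights: List[float]) -> Tuple[float, int]:
    """Dot product that visits only the stored nonzero entries.

    Returns the result and the number of multiplications actually performed.
    """
    total = 0.0
    multiplies = 0
    for i, v in sparse:
        total += v * weights[i]
        multiplies += 1
    return total, multiplies


if __name__ == "__main__":
    pixels = [0, 0, 3, 0, 0, 0, 5, 0]  # mostly-zero data, e.g. a sparse row of values
    weights = [1, 2, 3, 4, 5, 6, 7, 8]
    packed = compress(pixels)
    result, ops = sparse_dot(packed, weights)
    print(f"stored {len(packed)} of {len(pixels)} values")
    print(f"result = {result}, multiplies = {ops} instead of {len(pixels)}")
```

Because zeros never reach the compressed list, they cost neither memory nor multiply cycles. The trade-off is storing an index alongside each value, which only pays off when the data is mostly zeros.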

Through their design, the team was able to reduce the chip’s memory from its previous 2 megabytes to about 0.8 megabytes. The team tested the chip on previously collected datasets generated by drones flying through multiple environments, such as office and warehouse-type spaces.

“While we customized the chip for low power and high-speed processing, we also made it sufficiently flexible so that it can adapt to these different environments for additional energy savings,” Sze says. “The key is finding the balance between flexibility and efficiency.” The chip can also be reconfigured to support different cameras and inertial measurement unit (IMU) sensors.

From these tests, the researchers found they were able to bring down the chip’s power consumption from 2 watts to 24 milliwatts, and that at this power level the chip could process images at 171 frames per second, a rate even faster than what the datasets projected.
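The reported figures can be put in perspective with a little arithmetic. The sketch below uses only numbers quoted in this article and assumes the 24-milliwatt figure applies while the chip runs at its peak rate of 171 frames per second; the per-frame energy is therefore an estimate, not a measured value.

```python
# Figures quoted in the article.
FPGA_POWER_W = 2.0          # earlier FPGA-based design
NAVION_POWER_W = 0.024      # Navion chip
FRAME_RATE_FPS = 171        # peak camera frame rate processed
NANODRONE_BUDGET_W = 0.100  # researchers' estimate of a miniature drone's total power
MEMORY_BEFORE_MB = 2.0
MEMORY_AFTER_MB = 0.8

# How many times less power the new chip draws than the FPGA design.
reduction = FPGA_POWER_W / NAVION_POWER_W

# Assumption: 24 mW is drawn while processing 171 frames per second.
energy_per_frame_uj = NAVION_POWER_W / FRAME_RATE_FPS * 1e6  # microjoules per frame
time_per_frame_ms = 1000 / FRAME_RATE_FPS                    # time available per frame

# Share of a nanodrone's total power budget each design would consume.
fpga_budget_share = FPGA_POWER_W / NANODRONE_BUDGET_W
navion_budget_share = NAVION_POWER_W / NANODRONE_BUDGET_W

memory_saved = 1 - MEMORY_AFTER_MB / MEMORY_BEFORE_MB

print(f"power reduction: {reduction:.0f}x")
print(f"energy per frame: {energy_per_frame_uj:.0f} uJ, time per frame: {time_per_frame_ms:.2f} ms")
print(f"FPGA design: {fpga_budget_share:.0f}x the budget; Navion: {navion_budget_share:.0%} of it")
print(f"memory reduced by {memory_saved:.0%}")
```

The comparison shows why the custom chip matters: the FPGA design would need roughly 20 times a nanodrone’s entire power budget, while Navion uses about a quarter of it, leaving the rest for the motors.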

The team plans to demonstrate its design by installing the chip on a miniature race car. While a screen displays live video from an onboard camera, the researchers hope to show the chip determining where it is in space in real time, along with the amount of power it uses to perform this task. Eventually, the team plans to test the chip on an actual drone, and ultimately on a miniature drone.

This research was supported, in part, by the Air Force Office of Scientific Research, and by the National Science Foundation.

Source: MIT