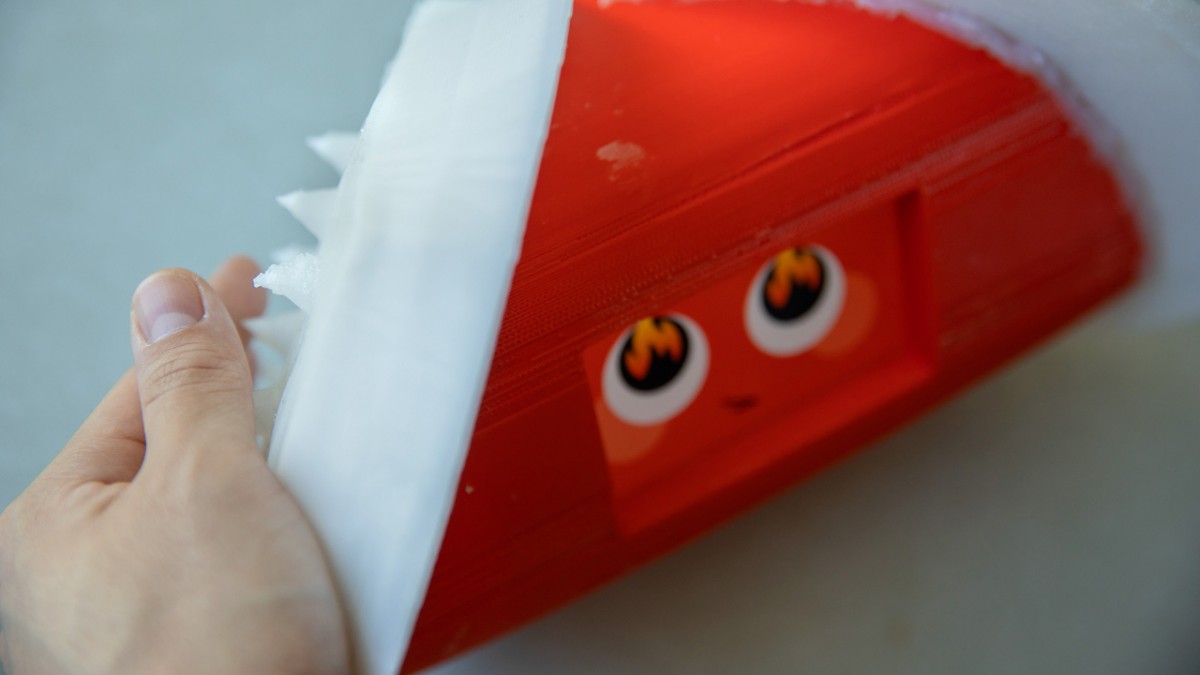

The robot prototype expresses its “anger” with both its eyes and its skin, which turns spiky through fluidic actuators that are inflated under its skin, based on its “mood.”

A new skin could allow robots to express a range of human emotions.

A team from Cornell University has developed a prototype robot that expresses emotions through changes in its outer surface. Its skin covers a grid of texture units whose shapes change based on how the robot feels.

“I’ve always felt that robots shouldn’t just be modeled after humans or be copies of humans,” Guy Hoffman, an assistant professor of mechanical and aerospace engineering, said in a statement. “We have a lot of interesting relationships with other species. Robots could be thought of as one of those ‘other species,’ not trying to copy what we do but interacting with us with their own language, tapping into our own instincts.”

The robot features an array of two shapes, goosebumps and spikes, that map to different emotional states. The actuation units for both shapes are integrated into texture modules, with fluidic chambers connecting bumps of the same kind.
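The mapping from emotional state to skin texture can be pictured with a short Python sketch. It is only an illustration, not the researchers' control software: the class names, the emotion labels other than anger, and the inflation levels are all hypothetical. The one detail taken from the article is that bumps of the same kind share a fluidic channel, so each shape is driven as a single unit.

```python
from dataclasses import dataclass

# Hypothetical lookup table. Only "angry -> spikes" echoes the article;
# the other emotions and all numeric levels are illustrative assumptions.
EMOTION_TO_TEXTURE = {
    "calm": {"goosebumps": 0.0, "spikes": 0.0},
    "excited": {"goosebumps": 0.8, "spikes": 0.0},
    "angry": {"goosebumps": 0.0, "spikes": 1.0},
}


@dataclass
class TextureChannel:
    """One fluidic chamber network shared by all bumps of the same shape."""
    shape: str
    level: float = 0.0  # 0.0 = flat, 1.0 = fully inflated (assumed scale)

    def set_level(self, level: float) -> None:
        # Clamp to the assumed valid range before storing.
        self.level = min(1.0, max(0.0, level))


class TextureSkin:
    """Hypothetical skin with one channel per texture shape."""

    def __init__(self) -> None:
        self.channels = {
            shape: TextureChannel(shape) for shape in ("goosebumps", "spikes")
        }

    def express(self, emotion: str) -> dict:
        # Unknown emotions fall back to the flat, "calm" texture.
        targets = EMOTION_TO_TEXTURE.get(emotion, EMOTION_TO_TEXTURE["calm"])
        for shape, level in targets.items():
            self.channels[shape].set_level(level)
        return {shape: ch.level for shape, ch in self.channels.items()}


if __name__ == "__main__":
    skin = TextureSkin()
    print(skin.express("angry"))
```

Driving each shape through one shared channel keeps the sketch close to the article's description; a real system would also need pumps or valves, which are left out here.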

The researchers tried two different control systems, with minimizing size and noise level a driving factor in both designs.

“One of the challenges is that a lot of shape-changing technologies are quite loud, due to the pumps involved, and these make them also quite bulky,” Hoffman said.

Examples of how skin texture expresses emotions include human goosebumps, the raised neck fur of cats, the raised back hair of dogs, the needles of a porcupine, the spiking of a blowfish and a bird’s ruffled feathers.

“Skin change is a particularly useful communication channel since it operates on two channels: It is perceived not only visually, but also haptically, if the human is holding or touching the robot,” the study states. “One of the most important capacities of social robots is to generate nonverbal behaviors to support social interaction. Humans and animals have a wide variety of modalities to express emotional and internal states for interaction, but in robotics these have been studied unequally, with a heavy focus on kinesics, facial expression, gaze, locomotion, and vocalics.”

While the researchers have yet to decide on a future application for the emotional robot, Hoffman said proving they can build one is an admirable first step.

“It’s really just giving us another way to think about how robots could be designed,” he said.

However, scaling up the technology could pose a challenge.

“At the moment, most social robots express [their] internal state only by using facial expressions and gestures,” the paper states. “We believe that the integration of a texture-changing skin, combining both haptic [feel] and visual modalities, can thus significantly enhance the expressive spectrum of robots for social interaction.”

Their work is detailed in a paper, “Soft Skin Texture Modulation for Social Robots,” presented at the International Conference on Soft Robotics in Livorno, Italy.