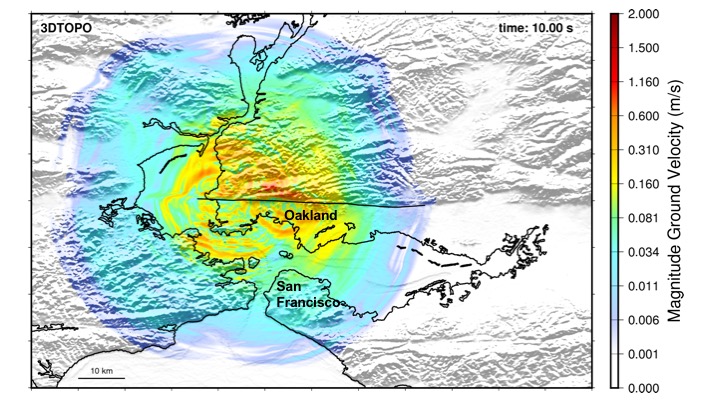

Images resulting from recent simulations at NERSC show the distribution of ground motion intensity across the San Francisco Bay Area region 10 seconds after a large-magnitude earthquake along the Hayward Fault. Credit: Berkeley Lab

Scientists are leveraging new and powerful supercomputers to better assess the risk of earthquakes near some of the country’s most active fault lines.

A joint research team from the U.S. Department of Energy’s (DOE) Lawrence Berkeley National Laboratory and Lawrence Livermore National Laboratory is working as part of the DOE’s Exascale Computing Project to demonstrate how ground motion at different seismic wave frequencies affects structures of different sizes.

David McCallen, a senior scientist in the Earth and Environmental Sciences Area at Berkeley Lab, said in an interview with R&D Magazine that high-performance computers give researchers a much more accurate and detailed way to assess how strongly the ground would shake at a given location during a major earthquake.

“We are looking for a substantial transformational improvement in our ability to simulate earthquake phenomena as a result of these machines,” he said. “We just haven’t been able to run these types of problems until now because they are just too big. With these exascale machines, it’s really exciting because we will be able to do the kind of modeling with these billions of zones that we would like to do.”

“Right now what [earthquake risk assessors] do is use a very empirically based approach, where we look at historical earthquakes from all around the world, we homogenize those and use that to estimate the ground motions that would occur,” he added. “That’s fine, but it can only get you so far because it is so site dependent.”

The Exascale Computing Project is a joint project focused on accelerating the delivery of a capable exascale computing ecosystem with 50 times more computational science and data analytics application power than the systems the DOE currently uses. The project is expected to launch by 2021.

McCallen said his group is working on writing software that will be used on the exascale computer, while also using the new technology to advance their ability to assess earthquake risk.

Researchers have long been able to simulate lower-frequency ground motion, which can impact larger structures. However, small structures like homes are much more vulnerable to high-frequency shaking, which requires more advanced computing to simulate.

Using the new supercomputer technology, the group is now able to simulate high-frequency ground shaking up to five hertz, which McCallen called a significant step.

“In our latest [simulations], we’ve been able to resolve a San Francisco Bay Area model to about five hertz, which is major progress,” McCallen said. “We want to understand the variation of ground motion from site to site, from location to location.”

The main benefit of understanding earthquake risk better is that new structures can be designed with that risk in mind.

“Over time the use of high performance computing for analyzing and designing infrastructure has really blossomed and has become a core and essential element of doing earthquake design for facilities,” McCallen said. “After decades of observations we know that these ground motions are very complicated. They vary from site to site substantially.”

The researchers found that two buildings with the same number of stories, located 2.4 miles apart and equidistant from the fault, could suffer very different degrees of damage in an earthquake. They also found that three-story buildings are less sensitive to earthquake magnitude than 40-story buildings, because larger-magnitude earthquakes produce significantly more long-period ground motion, which tall buildings respond to most strongly.

The researchers have already begun looking specifically at the Hayward Fault, which runs through the East Bay section of the San Francisco Bay Area. It is considered one of the most dangerous faults in the U.S. because it runs through the most populated part of the region.

McCallen said historical data points to a major earthquake along the Hayward Fault on average every 138 years. Because the last major earthquake on the fault occurred almost 150 years ago, it may be overdue.

Using this technology, communities along faults such as the Hayward Fault could also develop better disaster plans.

“If we know where the ground motions are going to be very high, where the trouble spots are going to be, we can develop mitigation and emergency response strategies that reflect that,” McCallen said. “We know where to send resources. If we know there are areas that are going to be heavily shaken, we’d better have the ability to get there and do emergency triage.”