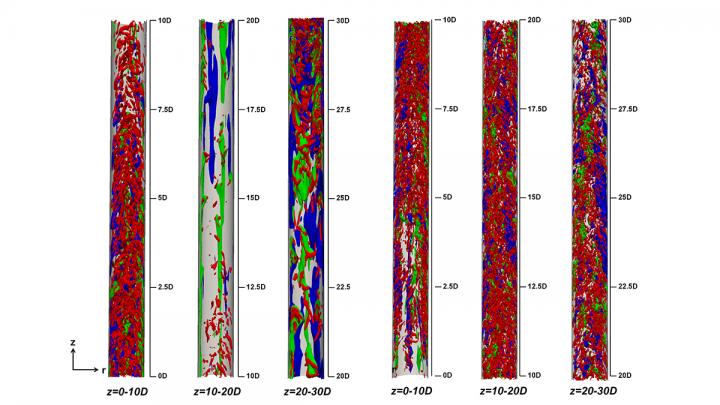

Researchers at IKE and ITLR at the University of Stuttgart are studying whether supercritical carbon dioxide could replace supercritical water as a working fluid at power plants. This simulation shows the structure and the high-speed (red) and low-speed (blue) streaks of the fluid during a cooling process. The researchers observed a major difference in turbulence between downward-flowing (left) and upward-flowing (right) supercritical carbon dioxide. (Credit: IKE, University of Stuttgart)

In conventional steam power plants, residual water must be separated from power-generating steam. This process limits efficiency and, in early-generation power plants, could be volatile, leading to explosions.

In the 1920s, Mark Benson realized that the risk could be reduced and power plants could be more efficient if water and steam could coexist. This coexistence could be achieved by bringing water to a supercritical state, in which the fluid no longer has distinct liquid and gas phases and behaves like both at once.

While the costs associated with generating the temperature and pressure conditions necessary to achieve supercriticality prevented Benson’s patented Benson Boiler from being widely adopted at power plants, his concepts offered the world its first glimpse at supercritical power generation.

Almost a century later, researchers at the University of Stuttgart’s Institute of Nuclear Technology and Energy Systems (IKE) and Institute of Aerospace Thermodynamics (ITLR) are revisiting Benson’s concepts to explore how they can improve safety and efficiency in modern power plants. Using high-performance computing (HPC), the researchers are developing tools that can make supercritical heat transfer more viable.

“Compared with subcritical power plants, supercritical power plants result in higher thermal efficiency, elimination of several types of equipment, such as any sort of steam dryer, and a more compact layout,” said team member Sandeep Pandey, a PhD candidate at IKE.

Mr. Pandey and Dr. Xu Chu of ITLR are leading the computational aspects of this research, and in conjunction with computer science researchers at the Singapore Institute of Technology (SIT), are employing machine learning techniques informed by high-fidelity simulations on a supercomputer, while also developing a tool that can be easily employed using commercial computers.

To make an accurate tool for commercial use, the team needed to run computationally intensive direct numerical simulations (DNS), which are only possible using HPC resources. The High-Performance Computing Center Stuttgart’s (HLRS’s) Hazel Hen supercomputer enabled the high-resolution fluid dynamics simulations they required.

The heat of the moment

While power generation and other industrial procedures use a variety of materials to generate steam or transfer heat, using water is a tried and true method–water is easily accessible, well-understood on a chemical level, and predictable under a wide range of temperature and pressure conditions.

That said, water reaches its critical point at 374 degrees Celsius, making supercritical steam generation a sizzling process. Water also needs to be under high pressure: about 22.1 megapascals, or more than 200 times the pressure coming out of a kitchen sink. Further, when a material enters its critical state, it exhibits unique properties, and even slight changes to temperature or pressure can have a large impact. For instance, supercritical water does not transfer heat as efficiently as it does in a purely liquid state, and the extreme heat needed to reach supercritical levels can lead to degradation of piping and, in turn, potentially catastrophic accidents.

Considering some of the difficulties of using water, Pandey and his colleagues are investigating using carbon dioxide (CO2). The common molecule offers a number of advantages, chief among them being that it reaches supercriticality at just over 31 degrees Celsius, making it far more efficient than water. Using carbon dioxide to make power plants cleaner may sound like an oxymoron, but Pandey explained that supercritical CO2 (sCO2) is a far cleaner alternative.

“sCO2 actually has zero ozone depletion potential, and little global warming potential or impact when compared to other common working fluids, such as chlorofluorocarbon-based refrigerants, ammonia, and others,” Pandey said. In addition, sCO2 needs far less space and can be compressed with far less effort than subcritical water. This, in turn, means that it requires a smaller power plant–an sCO2 plant requires ten-fold less hardware for its power cycle than traditional subcritical power cycles.

In order to replace water with carbon dioxide, though, engineers need to thoroughly understand its properties on a fundamental level, including how the fluid’s turbulence–or uneven, unsteady flow–transfers heat, and in turn, interacts with machinery.

When doing computational fluid dynamics simulations related to turbulence, computational scientists largely rely on three methods: Reynolds-Averaged Navier-Stokes (RANS) simulations, large eddy simulations (LES), and direct numerical simulations (DNS). While RANS and LES methods both require researchers to include some assumptions using data coming from experiments or other simulations, DNS methods start with no preconceived notions or input data, allowing them to be far more accurate, but much more computationally expensive.

“LES and RANS models are usually used for simpler fluids,” Pandey said. “We needed a high-fidelity approach for a complex fluid, so we decided to use DNS, hence our need for HPC resources.”

Neural networks for commercial computers

Using the stress and heat transfer data coming from its high-fidelity DNS simulations, the team worked with SIT’s Dr. Wanli Chang to train a deep neural network (DNN), a machine learning algorithm modeled roughly after biological neural networks, the networks of neurons that recognize and respond to external stimuli.

Traditionally, researchers train machine learning algorithms using experimental data so they can predict heat transfer between fluid and pipe under a variety of conditions. When doing so, however, researchers must be careful not to “overfit” the model; that is, not make the algorithm so accurate with a specific dataset that it does not offer accurate results with other datasets.

Using Hazel Hen, the team ran 35 DNS simulations, each focused on one specific operational condition, and then used the generated dataset to train the DNN. The team uses inlet temperature and pressure, heat flux, pipe diameter, and heat energy of the fluid as inputs, and the DNN generates the pipe’s wall temperature and wall shear stress as outputs. Eighty percent of the data generated in the DNS simulations is randomly selected to train the DNN, while researchers use the other 20 percent of the data for simultaneous, but separate, validation.
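The random 80/20 partition described above can be illustrated with a minimal Python sketch. It is not the team's code: the field names, the `split_train_validation` helper, the fixed seed, and the placeholder records are all assumptions made for illustration, and the values are not real DNS data.

```python
import random

# Hypothetical field names: the article lists these quantities,
# but not how the team actually stores them.
INPUT_FIELDS = ("inlet_temperature", "pressure", "heat_flux",
                "pipe_diameter", "heat_energy")
OUTPUT_FIELDS = ("wall_temperature", "wall_shear_stress")


def split_train_validation(samples, train_fraction=0.8, seed=0):
    """Randomly partition samples into training and validation subsets."""
    shuffled = list(samples)               # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_train = int(round(train_fraction * len(shuffled)))
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder records standing in for DNS output (made-up values).
samples = [{name: float(i) for name in INPUT_FIELDS + OUTPUT_FIELDS}
           for i in range(100)]

train, validation = split_train_validation(samples)
print(len(train), len(validation))
```

Keeping the validation subset completely separate from the training subset is what allows it to serve as an independent check while the network is being trained.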

This “in situ” validation work is important to avoid overfitting the algorithm, as it restarts the simulation if the algorithm begins showing a divergence between the training and validation datasets. “Our blind test results show that our DNN is successful in counter-overfitting, and has achieved general acceptability under the operational conditions that we covered in the database,” Pandey said.
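One common way to detect this kind of divergence is to watch for epochs in which the training error keeps falling while the validation error rises. The Python sketch below shows that idea only. The article does not say which criterion the team uses, so the `first_divergence_epoch` function, the `patience` threshold, and the loss curves are hypothetical.

```python
def first_divergence_epoch(train_losses, val_losses, patience=3):
    """Return the epoch index at which divergence is declared, or None.

    Divergence is assumed here to mean that, for `patience` consecutive
    epochs, the training loss decreased while the validation loss increased.
    """
    streak = 0
    for epoch in range(1, min(len(train_losses), len(val_losses))):
        train_improved = train_losses[epoch] < train_losses[epoch - 1]
        val_worsened = val_losses[epoch] > val_losses[epoch - 1]
        streak = streak + 1 if (train_improved and val_worsened) else 0
        if streak >= patience:
            return epoch
    return None


# Made-up loss curves for illustration only.
train_curve = [1.00, 0.80, 0.60, 0.50, 0.40, 0.30, 0.20]
val_curve = [1.10, 0.90, 0.70, 0.72, 0.75, 0.80, 0.90]

print(first_divergence_epoch(train_curve, val_curve))
```

A training loop could call such a check after every epoch and stop or restart once it returns a value, which is the general pattern behind guarding against overfitting with a held-out validation set.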

After the team felt confident with the agreement, they used the data to start creating a tool for more commercial use. Using the outputs from the team’s recent work as a guide, the team was able to use its DNN to simulate the operational condition’s heat energy with new data in 5.4 milliseconds on a standard laptop computer.

Critical next steps

To date, the team has been using OpenFOAM, a community code, for its DNS simulations. While OpenFOAM is a well-established code for a variety of fluid dynamics simulations, Pandey indicated that the team wanted to use a higher-fidelity code for its simulations. The researchers are working with a team from the University of Stuttgart’s Institute of Aerodynamics and Gas Dynamics (IAG) to use its FLEXI code, which offers higher accuracy and can accommodate a wider range of conditions.

Pandey also mentioned he is using a method called implicit LES in addition to the DNS simulations. While implicit LES simulations do not have quite the same high resolution present in the team’s DNS simulations, they do allow the team to run simulations with higher Reynolds numbers, meaning they can account for a wider range of turbulence conditions.
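For readers unfamiliar with the term, the Reynolds number is a dimensionless ratio of inertial to viscous forces in a flow, and higher values generally correspond to more turbulent flow. For pipe flow it is commonly defined as Re = ρ·U·D/μ. The Python sketch below evaluates that standard definition; the `reynolds_number` function name and the input values are illustrative assumptions, not properties of sCO2 or parameters from the team's simulations.

```python
def reynolds_number(density, velocity, diameter, dynamic_viscosity):
    """Pipe-flow Reynolds number Re = rho * U * D / mu (SI units assumed)."""
    if dynamic_viscosity <= 0:
        raise ValueError("dynamic viscosity must be positive")
    return density * velocity * diameter / dynamic_viscosity


# Illustrative values only (not sCO2 properties):
# density in kg/m^3, bulk velocity in m/s, pipe diameter in m, viscosity in Pa*s.
re_example = reynolds_number(density=1000.0, velocity=1.0,
                             diameter=0.002, dynamic_viscosity=1.0e-3)
print(re_example)
```

Because the range of eddy sizes that a DNS must resolve grows quickly with the Reynolds number, methods that resolve less detail, such as LES, are typically what make higher Reynolds numbers affordable.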

The team wants to continue to enhance its database in order to further improve its DNN tool. Further, it is collaborating with IKE experimentalists to conduct preliminary experiments and to build a model supercritical power plant in order to test the agreement between experiment and theory. The ultimate prize would be an accurate, easy-to-use, and computationally efficient tool that helps engineers and power plant administrators generate power more safely and efficiently.

sCO2-based technology has the potential to provide the flexible operation that is often desired alongside renewable energy. Nevertheless, thermal-hydraulic models and knowledge of heat transfer are limited, and this study aims to bridge that technological gap and assist engineers in building power cycle loops.

“Researchers at IKE are working with both experiments and numerical simulations,” he said. “As part of the numerical team, we are seeking answers for poor heat transfer. We study the complex physics behind fluid flow and turbulence, but the end goal is to develop a simpler model. Conventional power plants help facilitate the use of renewable energy sources by offsetting their intermittent energy generation, but currently aren’t designed to be as flexible as their renewable energy counterparts. If we can implement sCO2 based working fluids, we can improve their flexibility through more compact designs as well as faster start up and shut down times.”