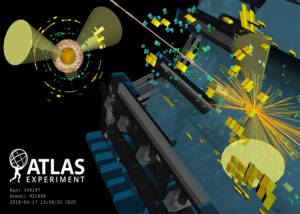

An ATLAS particle collision event display from 2018, showing the spray of particles (orange lines) emanating from the collision of protons, and the detector readout (squares and rectangles). (Credit: ATLAS collaboration)

A team of researchers at Lawrence Berkeley National Laboratory (Berkeley Lab) used a quantum computer to successfully simulate an aspect of particle collisions that is typically neglected in high-energy physics experiments, such as those that occur at CERN’s Large Hadron Collider.

The quantum algorithm they developed accounts for the complexity of parton showers, which are complicated bursts of particles produced in the collisions that involve particle production and decay processes.

Classical algorithms typically used to model parton showers, such as the popular Markov Chain Monte Carlo algorithms, overlook several quantum-based effects, the researchers note in a study published online Feb. 10 in the journal Physical Review Letters that details their quantum algorithm.

“We’ve essentially shown that you can put a parton shower on a quantum computer with efficient resources,” said Christian Bauer, who is Theory Group leader and serves as principal investigator for quantum computing efforts in Berkeley Lab’s Physics Division, “and we’ve shown there are certain quantum effects that are difficult to describe on a classical computer that you could describe on a quantum computer.” Bauer led the recent study.

Their approach meshes quantum and classical computing: It uses the quantum solution only for the part of the particle collisions that cannot be addressed with classical computing and uses classical computing to address all of the other aspects of the particle collisions.

Researchers constructed a so-called “toy model,” a simplified theory that can be run on an actual quantum computer while still containing enough complexity to prevent it from being simulated using classical methods.

“What a quantum algorithm does is compute all possible outcomes at the same time, then picks one,” Bauer said. “As the data gets more and more precise, our theoretical predictions need to get more and more precise. And at some point, these quantum effects become big enough that they actually matter” and need to be accounted for, he added.

In constructing their quantum algorithm, researchers factored in the different particle processes and outcomes that can occur in a parton shower, accounting for particle state, particle emission history, whether emissions occurred, and the number of particles produced in the shower, including separate counts for bosons and for two types of fermions.

The quantum computer “computed these histories at the same time and summed up all of the possible histories at each intermediate stage,” Bauer noted.
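
The difference between summing histories the quantum way and the classical way can be illustrated with a minimal Python sketch. It is not the team's algorithm: the two amplitudes and the function names are hypothetical, chosen only to show that adding amplitudes before squaring retains interference, while adding probabilities does not.

```python
import cmath

def classical_probability(amplitudes):
    """Square each history's amplitude, then add (no interference)."""
    return sum(abs(a) ** 2 for a in amplitudes)

def quantum_probability(amplitudes):
    """Add the amplitudes of all histories first, then square."""
    return abs(sum(amplitudes)) ** 2

# Hypothetical amplitudes for two emission histories that end in the
# same final state. These values are illustrative, not from the study.
in_phase = [0.5, 0.5]
out_of_phase = [0.5, 0.5 * cmath.exp(1j * cmath.pi)]  # opposite sign

for label, histories in [("in phase", in_phase), ("out of phase", out_of_phase)]:
    p_c = classical_probability(histories)
    p_q = quantum_probability(histories)
    print(f"{label}: classical = {p_c:.3f}, quantum = {p_q:.3f}")
```

In this toy case the classical sum gives the same answer for both pairs of histories, while the quantum sum ranges from full reinforcement to complete cancellation depending on the relative phase.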

The research team used the IBM Q Johannesburg chip, a quantum computer with 20 qubits. Each qubit, or quantum bit, can represent a zero, a one, or a so-called superposition, a state in which it represents both a zero and a one simultaneously. This superposition is what makes qubits uniquely powerful compared to standard computing bits, which can represent only a zero or a one.
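
As a rough illustration of superposition, the sketch below models one qubit as a pair of amplitudes in plain Python. It assumes a textbook Hadamard gate and a simulated measurement. The helper names are hypothetical, and nothing here runs on, or represents the interface of, the IBM hardware.

```python
import math
import random

ZERO = (1.0, 0.0)  # amplitudes for (|0>, |1>): a definite zero
ONE = (0.0, 1.0)   # a definite one

def hadamard(state):
    """Apply a Hadamard gate, which turns a definite state into an equal superposition."""
    a, b = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Chance of reading 0 or 1: the squared magnitude of each amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

def measure(state, rng):
    """Simulate one measurement, which yields a single classical bit."""
    p_zero, _ = probabilities(state)
    return 0 if rng.random() < p_zero else 1

plus = hadamard(ZERO)       # equal superposition of zero and one
rng = random.Random(0)      # fixed seed so repeated runs match
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1

print("probabilities:", probabilities(plus))
print("counts of 0 and 1 over 1000 measurements:", counts)
```

A register of n qubits needs 2**n amplitudes in this representation, so five qubits correspond to 32 amplitudes.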

Researchers constructed a four-step quantum circuit using five qubits; the algorithm requires 48 operations. They noted that noise in the quantum computer is likely to blame for differences between its results and those from a quantum simulator.

While the team’s pioneering efforts to apply quantum computing to a simplified portion of particle collider data are promising, Bauer said that he doesn’t expect quantum computers to have a large impact on the high-energy physics field for several years – at least until the hardware improves.

Quantum computers will need more qubits and much lower noise to have a real breakthrough, Bauer said. “A lot depends on how quickly the machines get better.” But he noted that there is a huge and growing effort to make that happen, and it’s important to start thinking about these quantum algorithms now to be ready for the coming advances in hardware.

Such quantum leaps in technology are a prime focus of an Energy Department-supported collaborative quantum R&D center that Berkeley Lab is a part of, called the Quantum Systems Accelerator.

As hardware improves, it will be possible to account for more types of bosons and fermions in the quantum algorithm, which will improve its accuracy.

Such algorithms should eventually have a broad impact in the high-energy physics field, Bauer said, and could also find application in heavy-ion-collider experiments.

Also participating in the study were Benjamin Nachman and Davide Provasoli of the Berkeley Lab Physics Division, and Wibe de Jong of the Berkeley Lab Computational Research Division.

This work was supported by the U.S. Department of Energy Office of Science. It used resources at the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science user facility.
