Q-CTRL, a pioneer of quantum infrastructure software designed to make quantum technologies useful, announced the appointment of Vince McBeth as principal, business development, defense and public sector. In his new role, McBeth will oversee Q-CTRL’s business development strategy in Washington, D.C., and the U.K. as the company deepens its stake in the 2021 AUKUS trilateral security partnership.…

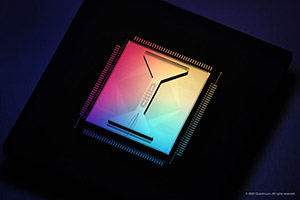

Quantinuum provides RIKEN large-scale hybrid quantum–supercomputing platform

Quantinuum, the world’s largest integrated quantum computing company, and RIKEN, Japan’s largest comprehensive research institution and home to a high-performance computing (HPC) center, have announced an agreement in which Quantinuum will provide RIKEN with access to its highest-performing H1-Series ion-trap quantum computing technology. Under the agreement, Quantinuum will install the hardware at RIKEN’s campus in Wako, Saitama. The deployment…

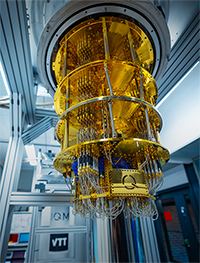

Finland’s 20-qubit quantum computer launch continues its supercomputer development

VTT Technical Research Centre of Finland and IQM Quantum Computers, a European maker of quantum computers, have completed Finland’s second quantum computer. The new 20-qubit machine further strengthens Finland’s position among the countries investing in quantum computing. Finland completed its first quantum computer, a 5-qubit system, in 2021. Finland announced its efforts in quantum computing…

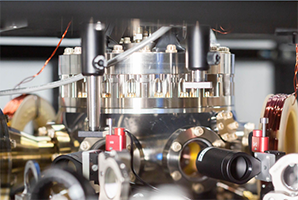

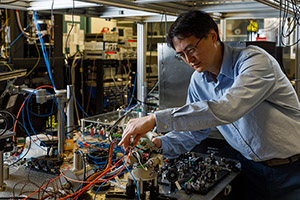

Bigger and better quantum computers possible with new ion trap, dubbed the Enchilada

From Sandia National Laboratories… Sandia National Laboratories has produced its first lot of a new world-class ion trap, a central component for certain quantum computers. The new device, dubbed the Enchilada Trap, enables scientists to build more powerful machines to advance the experimental but potentially revolutionary field of quantum computing. In addition to traps operated…

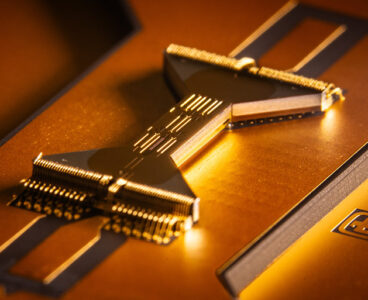

Quantum chemistry progresses towards a fault-tolerant regime using logical qubits

Quantinuum, the world’s largest quantum computing company, has become the first to simulate a chemical molecule by implementing a fault-tolerant algorithm on a quantum processor using logical qubits. This is an essential step towards using quantum computers to speed up molecular discovery: better modeling of chemical systems reduces the time needed to generate commercial and economic value.…

Qristal software suite enables using miniature quantum computers in real-world applications

Quantum Brilliance, a developer of room-temperature miniaturized quantum computing products and solutions, announces the launch of its Qristal software suite, enabling R&D teams to integrate quantum systems into real-world applications, a critical step towards the practical utility of the technology. With Qristal, developers and researchers can now develop and test novel quantum algorithms specifically…

Q-CTRL unites AI and quantum technology to boost progress with world-first software

Q-CTRL, a developer of useful quantum technologies through quantum infrastructure software, has announced groundbreaking new AI capabilities in its Boulder Opal software, promising to serve as a “rocket booster” for the emerging industry. The convergence of quantum technology and AI promises a technological revolution on par with the advent of electricity. Despite intense theoretical interest,…

Using the power of symmetry for new quantum technologies

By taking advantage of nature’s own inherent symmetry, researchers at Chalmers University of Technology in Sweden have found a way to control and communicate with the dark state of atoms. This finding opens another door towards building quantum computing networks and quantum sensors to detect the elusive dark matter in the universe. “Nature likes symmetries…

‘Quantum Calculator’ demonstrates quantum computers’ ability to solve optimization problems

Multiverse Computing, a value-based quantum computing solutions company, has released new research that illustrates how today’s quantum computers can perform complex mathematical calculations with a new algorithm. The algorithm created by Multiverse is designed to turn a quantum computer into a mathematical tool that can run complex calculations used by scientists daily,…

Sandia, Intel seek novel memory tech to support stockpile mission

From Sandia National Laboratories: In pursuit of novel advanced memory technologies that would accelerate simulation and computing applications in support of the nation’s stockpile stewardship mission, Sandia National Laboratories, in partnership with Los Alamos and Lawrence Livermore national labs, has announced a research and development contract awarded to Intel Federal LLC, a wholly owned subsidiary…

PASQAL and Univ. of Chicago unite on neutral-atom quantum computing research

PASQAL, a neutral-atom quantum computing research company headquartered in Paris, announces a collaboration agreement with Professor Hannes Bernien at the University of Chicago. The collaboration aims to advance neutral-atom quantum computing. PASQAL and Bernien will accomplish this by developing new techniques for enabling high-fidelity qubit control. Bernien is a professor of molecular engineering at…

Software companies partner to improve quantum algorithm development

Q-CTRL, a quantum control infrastructure software company, and Classiq, a maker of quantum algorithm development software, announce a partnership to provide an end-to-end platform for designing, executing, and analyzing quantum algorithms. The new partnership will integrate Classiq’s Quantum Algorithm Design platform with Q-CTRL’s advanced quantum control techniques designed to boost hardware performance. The collaboration combines the sector’s…

At Sandia Labs, a vision for navigating when GPS goes dark

From Sandia National Laboratories: Words like “tough” or “rugged” are rarely associated with a quantum inertial sensor. The remarkable scientific instrument can measure motion a thousand times more accurately than the devices that help navigate today’s missiles, aircraft, and drones. But its delicate, table-sized array of components that includes a complex laser and vacuum system…

Ultrathin device could replace a roomful of equipment

From Sandia National Laboratories: An ultrathin invention could make future computing, sensing and encryption technologies remarkably smaller and more powerful by helping scientists control a strange but useful phenomenon of quantum mechanics, according to new research recently published in the journal Science. Scientists at Sandia National Laboratories and the Max Planck Institute for the Science…

Massachusetts governor awards $3.5M R&D grant for new Northeastern University quantum facility

On Wednesday, September 7, the Baker-Polito Administration visited Northeastern University’s Innovation Campus in Burlington for the announcement of a new $3.5 million grant for the Experiential Quantum Advancement Laboratories (EQUAL), a nearly $10 million project to advance the emerging quantum sensing and related technology sectors in the state. The Northeastern-led project will establish new partnerships…

Finland and Singapore’s National Quantum Office ink MoU to strengthen quantum technology research cooperation

The National Quantum Office of Singapore, VTT Technical Research Centre of Finland, IQM Quantum Computers and CSC – IT Center for Science (Finland) agree to explore and promote research and development collaboration in the areas of quantum technologies. Under the MoU, the parties aim to accelerate the development of quantum technology hardware components, algorithms and…

Tech companies team up to detect defects in manufacturing with quantum computing

Multiverse Computing, a quantum computing solutions company, and IKERLAN, a technology transfer company, have released the results of a joint research study that detected defects in manufactured car parts via image classification by quantum artificial vision systems. The research team developed a quantum-enhanced kernel method for classification on universal gate-based quantum computers as well as…

Multiverse Computing announces quantum digital twin initiative with Bosch Group

Quantum computing company Multiverse Computing announces a research collaboration with the Bosch Automotive Electronics plant in Madrid to apply quantum computing to a virtual replica, or “digital twin,” of a factory. Multiverse is implementing quantum-based optimization algorithms at the Bosch plant, which delivers cutting-edge electronic components to several OEMs around the world.…

Frontier supercomputer debuts as world’s fastest, breaking exascale barrier

From Oak Ridge National Laboratory… The Frontier supercomputer at the Department of Energy’s Oak Ridge National Laboratory earned the top ranking today as the world’s fastest on the 59th TOP500 list, with 1.1 exaflops of performance. The system is the first to achieve an unprecedented level of computing performance known as exascale, a threshold of…

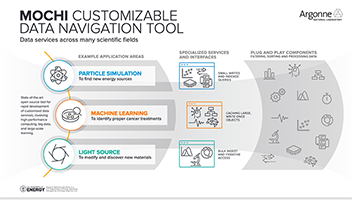

R&D 100 winner of the day: Mochi: Customizable Data Navigation Tool

Mochi, developed by Argonne National Laboratory, is a game-changing open-source tool for rapid development of customized data services supporting high-performance computing (HPC), big data and large-scale learning across many scientific fields. The Mochi framework enables composition of distributed data services from a collection of connectable modules and subservices. These customized data services require relatively small…

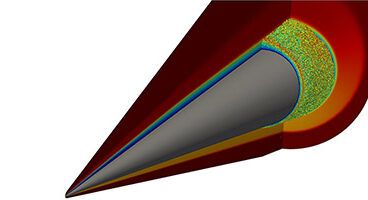

Siemens collaborates with Pasqal to research quantum applications in computer aided engineering, simulation and testing

Pasqal, a neutral atom-based quantum computing company, has announced a multi-year research collaboration with Siemens Digital Industries Software to advance the field of quantum computational multiphysics simulation. Siemens is well known in the field of computer-aided engineering. Pasqal’s proprietary quantum methods to solve complex nonlinear differential equations are expected to enhance the performance of Siemens’…

Q-CTRL and The Paul Scherrer Institute partner to support the scale-up of quantum computers

Q-CTRL, a developer of useful quantum technologies, announces a partnership with The Paul Scherrer Institute (PSI), Switzerland’s largest research institute for natural and engineering sciences, to pioneer R&D in the scale-up of quantum computers. The strategic partnership will leverage Q-CTRL and PSI’s combined expertise to deliver transformational capabilities to the broader research community. This partnership…

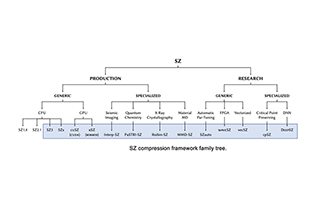

R&D 100 winner of the day: SZ: A Lossy Compression Framework for Scientific Data

Scientific data reduction is a critical problem that must be addressed for the success of exascale supercomputers and next-generation facility-size scientific instruments at a time when they are urgently needed to address important societal problems like climate change, water management, advanced manufacturing and the development of new vaccines and drugs. The SZ compression framework for…

Agnostiq releases benchmark research on quantum computing hardware

Agnostiq, a first-of-its-kind quantum computing SaaS startup, has announced its latest benchmark research, which analyzed the state of quantum computing hardware to determine its current and future practicality as a mainstream solution. The findings show that quantum computing hardware has improved over time and that application-specific benchmarks can serve as a more practical yardstick for…

Scientists announce first proof of quantum boomerangs and share backstory of Google’s Time Crystal

Researchers will discuss new experimental milestones in quantum science at the 2022 APS March Meeting, during a press conference at 10 a.m. CDT on Wednesday, March 16, 2022. The conference will be held onsite and streamed via Zoom. A new study in Physical Review X reports experimental evidence for a recently predicted quantum theory. “We…