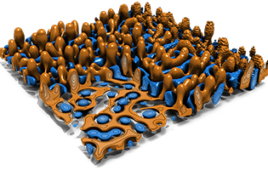

[Image courtesy of Johns Hopkins University]

Researchers say a brain-computer interface (BCI) implant successfully translated a patient's brain signals into computer commands.

A team at Johns Hopkins surgically implanted the BCI on the brain of a patient with ALS (amyotrophic lateral sclerosis). The team published its results, which showed that the device accurately translated brain activity into computer commands, in Advanced Science. The translation remained accurate over a three-month period without any retraining or recalibration of the BCI algorithm.

The researchers implanted the BCI, called CortiCom, on the surface of the brain areas responsible for speech and upper-limb function. The subject, a 62-year-old man diagnosed with ALS in 2014, has developed severe speech and swallowing problems.

Using the BCI and an algorithm trained to translate brain signals into computer commands, the subject freely and reliably issued a set of six basic commands: up, down, left, right, enter and back. With them, he navigated the options on a communication board to control smart devices such as room lights and streaming TV applications.

“While past studies on speech BCI have focused on communication, our study addressed the need to control smart devices directly,” said Dr. Nathan Crone, professor of neurology at Johns Hopkins Medicine and senior author of the study. “The BCI accurately recognized a set of 6 commands from neural signals alone, allowing Tim to navigate a communication board and control household devices without needing a language model to fix errors.”
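The study does not describe the software behind the communication board. Purely as an illustration, the sketch below assumes a simple grid of options and shows how six discrete commands are enough to move a cursor and make selections without typing. The board layout, the `BoardCursor` class and the choice to treat "back" as "undo the last selection" are hypothetical, not the Johns Hopkins implementation.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical board: these options and this grid shape are illustrative only.
BOARD = [
    ["Lights on", "Lights off", "TV"],
    ["Yes", "No", "Help"],
]


@dataclass
class BoardCursor:
    grid: List[List[str]]
    row: int = 0
    col: int = 0
    history: List[str] = field(default_factory=list)  # items chosen with "enter"

    def apply(self, command: str) -> Optional[str]:
        """Apply one decoded command; return the selected item on 'enter', else None."""
        if command == "up":
            self.row = max(self.row - 1, 0)  # stay on the board at the top edge
        elif command == "down":
            self.row = min(self.row + 1, len(self.grid) - 1)
        elif command == "left":
            self.col = max(self.col - 1, 0)
        elif command == "right":
            self.col = min(self.col + 1, len(self.grid[self.row]) - 1)
        elif command == "enter":
            choice = self.grid[self.row][self.col]
            self.history.append(choice)
            return choice
        elif command == "back":
            # Assumption: "back" undoes the most recent selection.
            if self.history:
                self.history.pop()
        else:
            raise ValueError(f"unknown command: {command!r}")
        return None


cursor = BoardCursor(BOARD)
for cmd in ["right", "right", "enter"]:
    selected = cursor.apply(cmd)
print(selected)  # TV
```

Because the cursor is clamped at the board's edges, an extra "right" or "up" simply has no effect, so a single misdecoded command cannot move the user off the board.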

How the BCI works

Last year, Dr. William Anderson and Dr. Chad Gordon of Johns Hopkins placed two soft plastic sheets of flat electrodes on the surface of the subject's brain. The sheets, each about the size of a large postage stamp, recorded the electrical signals produced by tens of thousands of brain cells.

The subject and the team trained the BCI to recognize his unique brain signals by having him repeat each of the six commands. Once the BCI was trained, the subject spoke the same commands to control a communication board in real time, usually for about five minutes a day over three months.

According to Shiyu Luo, a graduate student in biomedical engineering at Johns Hopkins and first author of the paper, most people found the subject's speech difficult to understand, but the BCI accurately translated his brain activity into computer commands. It even enabled him to express feelings or desires.

The researchers found that combining signals from both the motor and sensory areas of the brain produced the best results. Areas related to movement of the lips, tongue and jaw contributed the most, and their signals stayed consistent over the three months.

Unlike many other BCIs, this approach uses electrodes that do not penetrate the brain. The researchers say this lets the device record the activity of large populations of neurons on the brain's surface rather than that of individual cells. Because the algorithm does not need retraining, Crone says, the approach could potentially let participants use the BCI whenever and wherever they want without ongoing researcher intervention.

“What’s amazing about our study is that the accuracy didn’t change over time, it worked just as well on Day 1 as it did on Day 90,” Crone said. “Our results may be the first steps in realizing the potential for independent home use of speech BCIs by people living with severe paralysis.”


What comes next?

The researchers say one limitation was the small vocabulary available for decoding speech. While the commands proved intuitive and capable of controlling grid-based applications, a larger vocabulary could reduce the time needed to carry out a wider range of tasks.

Crone and his team are now working with the subject to expand the vocabulary. They also want to work toward translating neural signals into acoustic speech. In addition, the team is recruiting participants for clinical trials of BCI systems for people with movement and communication impairments.

“It’s a very exciting time in the field of brain-computer interfaces,” said Crone. “For those who have lost their ability to communicate due to a variety of neurological conditions, there’s a lot of hope to preserve or regain their ability to communicate with family and friends. But there’s still a lot more work to be done to bring this to all patients who could use this technology.”