Occasionally a star or other celestial object may pass too close to a neighboring black hole and be ripped apart by the black hole’s extreme tidal forces. Black holes are among the strangest objects in space: regions whose gravity is so strong that nothing, not even particles or electromagnetic radiation such as light, can escape from inside them. Dr. P. Chris Fragile of the Department of Physics and Astronomy at the College of Charleston and other researchers are doing innovative research on tidal disruption events (TDEs) to determine what happens when objects pass too close to black holes. A unique aspect of Fragile’s work at the College of Charleston is the participation of undergraduate students in the research.

This work requires running specialized simulation code on state-of-the-art supercomputers. The team uses the Cosmos++ code on supercomputers at the Texas Advanced Computing Center (TACC) at The University of Texas at Austin.

“In our TDE simulations, we used hundreds of compute cores running simultaneously for two to three days. If we had run these simulations on a single-core computer, they would have been running continuously for over two years. The team completed a total of fifteen simulations over about a two-month period, which would have required about 30 years of compute time on a single-core computer,” states Fragile.
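The figures in this quote can be checked with simple core-time arithmetic. The sketch below is a back-of-the-envelope estimate, not the team’s accounting: it assumes 300 cores as a stand-in for “hundreds of compute cores” and 2.5 days for “two to three days” (both hypothetical values picked from the quoted ranges), and it assumes perfect parallel scaling, so single-core time equals cores multiplied by wall-clock time.

```python
# Back-of-the-envelope check of the quoted compute-time figures.
# Hypothetical inputs chosen from the ranges in the quote:
#   CORES = 300 stands in for "hundreds of compute cores"
#   WALL_DAYS = 2.5 stands in for "two to three days"
# Assumes perfect scaling: single-core time = cores x wall-clock time.

DAYS_PER_YEAR = 365.25


def serial_years(cores: int, wall_days: float) -> float:
    """Single-core time, in years, for a run using `cores` cores for `wall_days` days."""
    core_days = cores * wall_days
    return core_days / DAYS_PER_YEAR


CORES = 300
WALL_DAYS = 2.5
N_SIMULATIONS = 15  # from the quote

per_run = serial_years(CORES, WALL_DAYS)
total = N_SIMULATIONS * per_run

print(f"one run : ~{per_run:.1f} single-core years")
print(f"15 runs : ~{total:.0f} single-core years")
```

With these assumed inputs, one run works out to roughly two single-core years and fifteen runs to roughly 30, consistent with the numbers Fragile quotes.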

Tidal Disruption Events: Tracking the Breakup of a Star

When a celestial object passes too close to a black hole, it is stretched along the direction toward the black hole and compressed in the perpendicular directions. If the object is a white dwarf, the dead core of a sun-like star, the compression may be strong enough to briefly reignite nuclear fusion, bringing the white dwarf back to life, if only for a few seconds. For this to happen, the white dwarf must pass relatively close to (inside the “tidal radius” of) an intermediate-mass black hole (IMBH), one about 1,000 to 10,000 times the mass of the sun.
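A common order-of-magnitude estimate of the tidal radius is r_t ≈ R_* (M_BH / M_*)^(1/3). Comparing it with the event-horizon (Schwarzschild) radius R_s = 2GM/c² helps show why an intermediate-mass black hole is needed: for much heavier black holes, the tidal radius falls inside the horizon and the white dwarf is swallowed whole rather than torn apart. The sketch below is a Newtonian estimate that ignores black hole spin and factors of order unity; the white dwarf mass (0.6 solar masses) and radius (about 8,700 km) are assumed typical values, not figures from the article.

```python
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # solar mass, kg

# Assumed, typical white dwarf parameters (illustrative, not from the article).
M_WD = 0.6 * M_SUN  # kg
R_WD = 8.7e6        # m, roughly Earth-sized


def tidal_radius(m_bh: float, m_star: float = M_WD, r_star: float = R_WD) -> float:
    """Order-of-magnitude tidal radius r_t ~ R_* (M_BH / M_*)^(1/3), in metres."""
    return r_star * (m_bh / m_star) ** (1.0 / 3.0)


def schwarzschild_radius(m_bh: float) -> float:
    """Event-horizon radius of a non-spinning black hole, R_s = 2 G M / c^2, in metres."""
    return 2.0 * G * m_bh / C**2


for solar_masses in (1e3, 1e4, 1e6):
    m_bh = solar_masses * M_SUN
    r_t = tidal_radius(m_bh)
    r_s = schwarzschild_radius(m_bh)
    where = "outside" if r_t > r_s else "inside"
    print(f"{solar_masses:8.0e} M_sun: r_t = {r_t:.2e} m, R_s = {r_s:.2e} m "
          f"(tidal radius {where} the horizon)")
```

Because r_t grows only as the cube root of the black hole mass while R_s grows linearly, the two cross somewhere between these cases, which is why the disruption of a white dwarf requires a comparatively light black hole.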

Tidal disruption events can sometimes produce huge electromagnetic outbursts and potentially detectable gravitational-wave signals. While some of the material ripped from the disrupted object will ultimately be swallowed by the black hole, a significant fraction will be flung into surrounding space as unbound debris. This debris can eventually be incorporated into future generations of stars and planets, so its chemical makeup can have important consequences. The nuclear burning that takes place during the tidal disruption of a white dwarf significantly changes its chemical composition, converting the mostly helium, carbon, and oxygen of a typical white dwarf into heavier elements closer to calcium and iron on the periodic table.

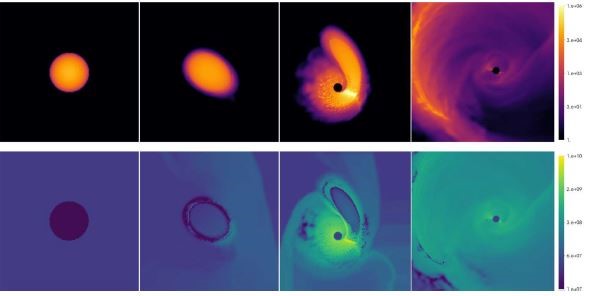

One set of computer simulations confirmed that nuclear burning is a common outcome, with up to 60 percent of the white dwarf’s mass converted to iron in close encounters with the black hole. The simulations showed that more distant approaches produce calcium, while closer approaches produce iron. Figure 1 shows results from a high-resolution three-dimensional simulation of a white dwarf being tidally disrupted by a 1,000-solar-mass black hole. This research should help characterize future tidal disruption events and reveal more about intermediate-mass black holes.

Figure 1. Plot of the mass density (top) and temperature (bottom) at four different times from a computer simulation of a white dwarf being tidally disrupted by a 1,000-solar-mass black hole. Courtesy of Dr. Chris Fragile.

Cosmos++ Code Simulates Black Hole Physics

The Fragile group uses the Cosmos++ code to simulate black holes. Cosmos was originally designed for cosmology but also includes a number of other physics modules. Fragile helped develop Cosmos in 2005, while he was a postdoctoral researcher at Lawrence Livermore National Laboratory (LLNL), together with LLNL colleagues Steven Murray and Peter Anninos. For the TDE simulations, Fragile’s team used Cosmos++ with adaptive mesh refinement (AMR) and a moving mesh. AMR adds finer grid zones in the regions of a simulation where more accuracy and resolution are needed.
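The core idea of AMR, flagging cells that need more resolution and subdividing them, can be illustrated in one dimension. The sketch below is a hypothetical example and not the refinement criterion Cosmos++ actually uses: it flags a cell when the relative density jump to a neighbor exceeds an assumed threshold, then splits each flagged cell in half. The function names, the threshold of 0.5, and the density profile are all illustrative assumptions.

```python
from typing import List


def flag_cells(density: List[float], threshold: float = 0.5) -> List[bool]:
    """Flag cells on both sides of any interface where the relative density
    jump exceeds `threshold` (a hypothetical gradient-based criterion)."""
    flags = [False] * len(density)
    for i in range(len(density) - 1):
        rho_l, rho_r = density[i], density[i + 1]
        # Guard against division by zero in near-vacuum cells.
        jump = abs(rho_r - rho_l) / max(min(rho_l, rho_r), 1e-30)
        if jump > threshold:
            flags[i] = flags[i + 1] = True
    return flags


def refine(edges: List[float], flags: List[bool]) -> List[float]:
    """Split every flagged cell in half and return the new list of cell edges."""
    new_edges = [edges[0]]
    for i, flagged in enumerate(flags):
        left, right = edges[i], edges[i + 1]
        if flagged:
            new_edges.append(0.5 * (left + right))  # add a midpoint edge
        new_edges.append(right)
    return new_edges


# Illustrative 1D profile: a dense "star" in cells 3-5 surrounded by near-vacuum.
edges = [float(x) for x in range(9)]  # 8 cells of unit width
density = [1e-6, 1e-6, 1e-6, 1.0, 2.0, 1.0, 1e-6, 1e-6]

flags = flag_cells(density)
print(flags)
print(refine(edges, flags))
```

Only the cells around the star get finer zones; the empty cells far from it keep their coarse spacing, which is where the savings of AMR come from. Production AMR codes typically refine recursively over several levels and in three dimensions.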

“We used adaptive mesh zones around the white dwarf star to gain the most accurate simulation of what was occurring with the star. The moving mesh allowed us to use a simulation domain only slightly larger than the white dwarf itself. The domain then moved along with the star and resulting fragments that were released after the star exploded. These adaptations provided the most accurate simulation of the TDE and bursting of the white dwarf star,” said Fragile.
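One simple way to picture a domain that travels with the star is to re-center a fixed-size box on the star’s center of mass after every time step. The sketch below illustrates that idea only; it is not Cosmos++’s actual moving-mesh scheme, and the `Domain` class, the `step` function, and the three-element “star” are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Element = Tuple[float, float, float]  # (x, y, mass)
Velocity = Tuple[float, float]        # (vx, vy)


@dataclass
class Domain:
    """Square simulation box described by its center and half-width."""
    cx: float
    cy: float
    half_width: float

    def contains(self, x: float, y: float) -> bool:
        return abs(x - self.cx) <= self.half_width and abs(y - self.cy) <= self.half_width


def center_of_mass(elements: List[Element]) -> Tuple[float, float]:
    """Mass-weighted centroid of (x, y, mass) fluid elements."""
    total = sum(m for _, _, m in elements)
    cx = sum(x * m for x, _, m in elements) / total
    cy = sum(y * m for _, y, m in elements) / total
    return cx, cy


def step(elements: List[Element], velocities: List[Velocity],
         dt: float, domain: Domain) -> List[Element]:
    """Advance each element by one time step, then re-center the box on the star."""
    moved = [(x + vx * dt, y + vy * dt, m)
             for (x, y, m), (vx, vy) in zip(elements, velocities)]
    domain.cx, domain.cy = center_of_mass(moved)
    return moved


# A "star" of radius 1 moving in +x; the box is only slightly larger than the star.
star = [(-1.0, 0.0, 1.0), (0.0, 0.0, 2.0), (1.0, 0.0, 1.0)]
vel = [(1.0, 0.0)] * 3
box = Domain(0.0, 0.0, 1.5)

for _ in range(10):
    star = step(star, vel, 1.0, box)

print(box.cx, box.cy)                               # box center has followed the star
print(all(box.contains(x, y) for x, y, _ in star))  # star still inside the small box
```

A fixed box of the same size would have lost the star after the first couple of steps; letting the box move is what allows the domain to stay only slightly larger than the object being simulated.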

Supercomputers Power Black Hole Research

TACC operates the Stampede2 supercomputer, one of the most powerful supercomputers in the U.S. for open science research. Stampede2 is an 18-petaflop system containing 4,200 Intel Xeon Phi nodes; it also incorporates Intel Xeon Scalable processors and the Intel Omni-Path Architecture interconnect. Fragile’s group was allocated time on TACC’s HPC systems by XSEDE, the eXtreme Science and Engineering Discovery Environment, funded by the National Science Foundation.

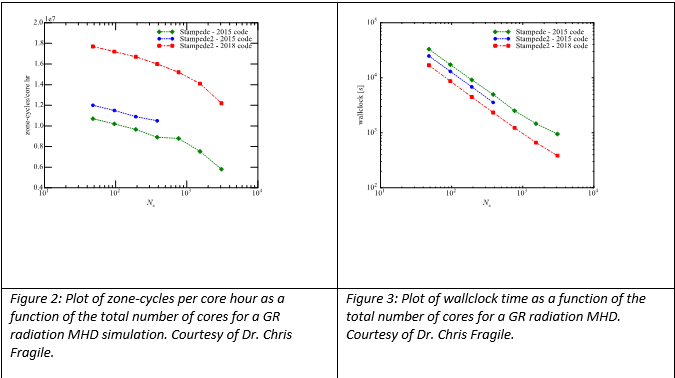

The Fragile research team began using the TACC supercomputers in their work in 2015. Fragile reports that they have measured about a 50 percent improvement in overall performance on a representative test problem run on the Stampede and Stampede2 HPC systems as shown in the following figures.

Fragile attributes the performance increases to code optimization.

“We use Intel MPI for parallelization, running on up to thousands of cores. The code has been shown to scale well up to this limit. It should scale well even beyond this limit, but we haven’t had reason to go to a larger number of cores. The team has used Intel VTune to identify bottlenecks in the code and develop mitigation strategies. We have, so far, achieved about a 50 percent speed up in the execution time of the code.”
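Claims such as “a 50 percent speed up” and “scales well” can be made precise with two standard metrics: speedup, the ratio of old to new run time, and strong-scaling efficiency, which measures how close a fixed-size problem comes to running p times faster on p times as many cores. The sketch below defines both; all timings in it are hypothetical placeholders, not measurements of Cosmos++. It also shows why “50 percent” can be read two ways: a 1.5x speedup cuts run time by one third, while halving the run time is a 2x speedup.

```python
def speedup(t_before: float, t_after: float) -> float:
    """Ratio of old to new wall-clock time for the same problem."""
    return t_before / t_after


def time_saved_fraction(t_before: float, t_after: float) -> float:
    """Fraction of the original run time that was eliminated."""
    return 1.0 - t_after / t_before


def strong_scaling_efficiency(t_ref: float, p_ref: int, t_p: float, p: int) -> float:
    """Parallel efficiency of a fixed-size problem on p cores relative to p_ref cores.
    1.0 means perfect scaling (e.g., doubling the cores halves the run time)."""
    return (t_ref * p_ref) / (t_p * p)


# Hypothetical timings, for illustration only.
print(speedup(150.0, 100.0))               # 1.5x faster...
print(time_saved_fraction(150.0, 100.0))   # ...means one third less run time
print(strong_scaling_efficiency(1000.0, 128, 140.0, 1024))
```

An efficiency close to 1.0 across a range of core counts is what “scales well” usually means in practice.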

Code Optimization Provides Performance Increases

Because supercomputer architectures change every few years, codes cannot always take full advantage of the features of modern machines. XSEDE’s software experts, through the Extended Collaborative Support Services (ECSS) program, have been important partners in optimizing Cosmos++ to run efficiently on the TACC supercomputers.

Fragile says he is fortunate to be working with Damon McDougall, a research associate at TACC and at the Institute for Computational Engineering and Sciences at UT Austin. McDougall is helping optimize the Cosmos++ code to take advantage of the Intel Xeon Scalable and Xeon Phi processors on the Stampede2 supercomputer. According to Fragile, “I have been working with Damon McDougall, through ECSS, to try to identify code bottlenecks and develop techniques to alleviate them. We have made progress on memory management and associated overhead costs. We are also trying to develop a hybrid parallelization strategy for my code. The code currently only uses MPI parallelization, but we’d like for it to be able to use MPI along with OpenMP. This effort is still ongoing.”

Challenges for Future Astrophysics Research

There are still a number of challenges for future astrophysics research in areas such as black hole accretion that will require using increasingly powerful supercomputers. According to Fragile, “We would still like to cover orders of magnitude larger spatial domains and orders of magnitude longer time intervals. That’s going to require even larger and faster supercomputers and even better codes.”

Linda Barney is the founder and owner of Barney and Associates, a technical/marketing writing, training and web design firm in Beaverton, OR.

This article was produced as part of Intel’s HPC editorial program, with the goal of highlighting cutting-edge science, research and innovation driven by the HPC community through advanced technology. The publisher of the content has final editing rights and determines what articles are published.