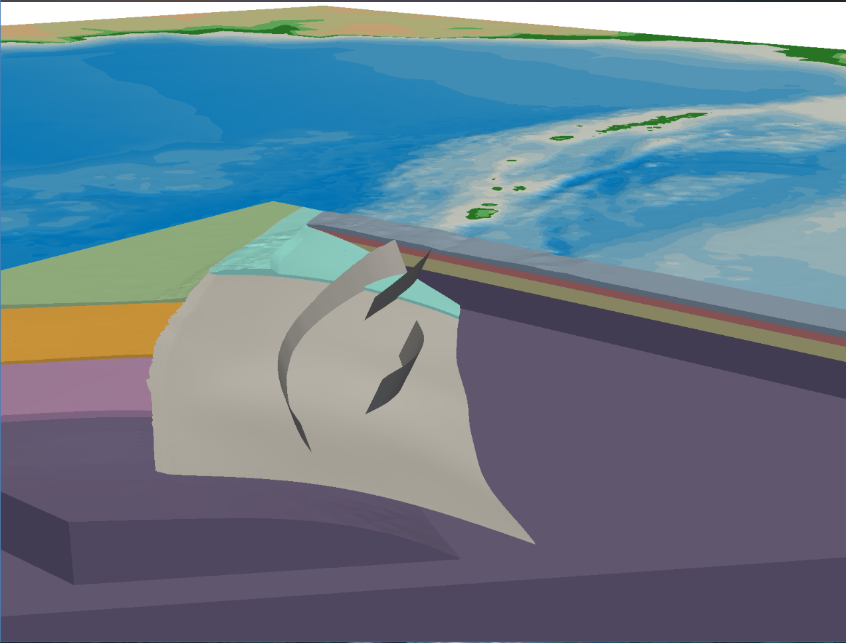

Figure 1. Tectonic plates involved in the Sumatra-Andaman earthquake. Complex 3D geometry of the Sumatra subduction zone model. The curved megathrust is intersecting bathymetry, as are the 3 adjacent splay faults: one forethrust and two backthrusts. The subsurface consists of horizontally layered continental crust and subducting layers of oceanic crust. Each layer is characterized by a different wave speed and thus requires a different mesh resolution. Courtesy of Ludwig-Maximilians-Universität Munich.

The December 2004 Sumatra-Andaman earthquake was one of the most powerful and destructive seismic events in history. Failure of 1300–1500 km of the Sumatra subduction zone caused more than eight minutes of violent shaking. The resulting Mw 9.1–9.3 megathrust earthquake generated a tsunami that was up to 30m high along the northern coast of Sumatra. The Sumatra earthquake ruptured the greatest fault length of any recorded earthquake and triggered a series of tsunamis, killing up to 280,000 people in 14 countries, and even displaced Earth’s North Pole by 2.5 cm. The exact sequence of events involved in the earthquake continues to raise many questions.

Scientists perform extensive research on earthquakes to learn more about them and to help discover a way to predict when earthquakes will occur. However, understanding megathrust earthquakes and the tsunamis they cause is challenging. This is due to the inaccessibility and the complexity of the geological setting, the general uncertainties on how earthquakes operate and the massive amount of data produced by synthetic scenarios.

Researchers from the Ludwig-Maximilians-Universität Munich (LMU) and the Technical University of Munich (TUM) investigated the 2004 Sumatra-Andaman earthquake to help better understand the dynamics of megathrust earthquakes and tsunamis. The team published the results of their research in the Extreme Scale Multi-Physics Simulations of the Tsunamigenic 2004 Sumatra Megathrust Earthquake paper that won the Best Paper award at SC17.

“Incorporating the observed natural complexity of earthquake sources invariably involves the use of numerical methods, highly efficient simulation software, and High Performance Computing (HPC) systems,” said Dr. Alice-Agnes Gabriel, Assistant Professor of Geophysics at LMU Munich and the lead researcher from the LMU side of the team. “Only by exploiting HPC, can these simulation models: 1) resolve dynamic stress drop at the rupture tip concurrently with seafloor displacement over thousands of kilometers; 2) be coupled with geodynamic thermo-mechanical models to provide constraints on fault rheology and the state of stress; and 3) be directly coupled to simulations of tsunamis.”

The work is a collaboration between LMU and TUM domain scientists and computational scientists.

Dr. Michael Bader, Professor of Informatics – Scientific Computing, Technical University of Munich states, “Our seismology partners challenge us with concrete problems and give suggestions on relevant challenges to research. We as computational scientists try our best to perform optimization, vectorization and parallelization techniques on HPC systems to create the simulation models and reduce computation time. Our goal is to create HPC simulation models that are better, run faster and can handle larger amounts of data.”

Anatomy of the 2004 Sumatra-Andaman Earthquake

“Earthquakes happen as rock below Earth’s surface breaks suddenly, often as a result of the slow movement of tectonic plates. If two or more plates collide, one plate will often force the other below it. Regions where this process occurs are called subduction zones and can host very large and shallowly dipping faults—called ‘megathrusts,'” stated Gabriel.

Subduction zones have released most of the seismic energy over the last century and generate the largest earthquakes (moment magnitudes of greater than 9) triggering potentially devastating secondary effects. An example is the nuclear level 7 meltdown caused by the Tsunami following the 2011 Tohoku-Oki event and 210 billion USD economic loss due to the 2011 Tohoku-Oki earthquake. The devastating Sumatra-Andaman earthquake was a megathrust earthquake that created a massive tsunami as the ocean floor rose and displaced large amounts of water.

Studying Subduction Zone Megathrust Earthquakes

Gabriel indicates that in subduction zones, earthquakes occur at regular intervals. However, it is not yet precisely known under what conditions such subduction earthquakes can cause tsunamis, or how big such tsunamis will be. The underlying physics that drive earthquake rupture are poorly understood—we currently do not know:

- how much frictional resistance an earthquake has to overcome across a megathrust

- what role fluid pressuring the rock plays

- if a transfer of energy to smaller faults splaying off the megathrust are crucial for tsunami generation.

Most early-warning systems for tsunamis have a high false-alarm rate: It is not enough to simply track large subduction zone earthquakes to forecast tsunamis.

Petascale Multi-Physics Simulation of the 2004 Sumatra Earthquake

The LMU – TUM simulated scenario represents the first dynamic rupture simulation of the 2004 Sumatra earthquake that considers detailed geometry of the megathrust-splay fault system, 3D layered media, and high-resolution topography. The earthquake scenario matches coseismal slip and horizontal and vertical surface displacements inferred from observations of the Sumatra earthquake. The results of the simulation provide analysis of splay fault activation and information on the time-dependent seafloor initial conditions to help in studying tsunami generation and propagation.

To study the earthquake, the team used a computational grid to divide the simulation into small pieces and compute specific numeric equations to simulate information such as seismic shaking or ocean floor displacement. Bader indicates that using a tetrahedral mesh was essential to represent the shallow angles and shape where the two continental plates meet.

The simulation includes 1500 km of fault length represented at 400m geometrical resolution and 2.2 Hz frequency content of the seismic wave field. While many other large-scale earthquake simulations aim at resolving as high frequencies as possible, the focus of the LMU-TUM study was on understanding fault processes, long-period ground motions (given the lower resonance frequency of tsunamis than those of vulnerable buildings), and the intermediate frequency range in the vicinity of the megathrust potentially transferring energy to activate slip on splay faults. The team performed the scenario simulation on an unstructured tetrahedral mesh with 221 million elements and 111 billion degrees of freedom with order 6 accuracy in space and time.

Supercomputers Used in the Research

The LMU and TUM team created a sophisticated 3D HPC earthquake software called SeisSol and have been improving it during the last six years. The latest version called Shaking Corals—hinting to a coral reef in the Indian Ocean—provided one of the few but precious measurements of ocean floor displacement due to the Sumatra earthquake. The SeisSol earthquake simulation tool is an open source software that allows for the simulation of various aspects of earthquake scenarios and enables researchers to answer geophysical questions for highly complicated scenarios, where there is lack of sufficiently dense data from historic earthquakes. The state-of-the art software is based on a high-order discontinuous Galerkin (i.e., finite-element-type) discretization and provides high-order explicit time stepping to solve the elastic wave equations.

SeisSol breaks down all major numeric calculations to such small matrix multiplications and exploits Intel LIBXSMM, the library for small matrix multiplications, to obtain fast performance on a single core. In addition, the team used Intel VTune to help with profiling system information.

Local Time Stepping Reduces Computing Time

Simulating all the elements of the Sumatra-Andaman earthquake took an enormous amount of computing time.

“To reduce the computation time, the team used a local time stepping (LTS) method which was probably the most complex algorithmic improvement to develop and took two years of time,” states Bader.

In areas where the simulations require much more spatial detail, researchers must slow down the simulation by performing more time steps in these areas. The group developed a clustered LTS scheme, which uses a binning of elements in time clusters, where each cluster’s time step is a multiple of the previous cluster’s time step. Bader indicates that the cluster-based approach sacrifices part of the theoretical speed-up possible for an element-based LTS in favor of the hardware-oriented data structures and efficient load balancing approaches necessary to achieve high performance and scalability up to petascale. The use of LTS reduced the computation time required for the simulations and led to much of the team’s 13-fold speedup.

Optimizing Communication and Processing Time

Due to the increasing amount of data processed by massively parallel applications, writing data to disk either for post-processing, visualization, or to allow restarting the application in case of hardware failures becomes a bottleneck.

Bader states, “We used our library ASYNC to manage overlapping of computation with input/output (I/O) operations. We can either use staging nodes, (additional compute node reserved only for I/O to achieve that overlap), or we can use a dedicated compute core on each node for such I/O. As we already have one compute core set aside in SeisSol to manage message passing interface (MPI) communication for all node-local threads, we use this communication thread also for triggering I/O. For the large Sumatra simulation on SeisSol, this allowed us to write all postprocessing and checkpointing data virtually without overhead in computing time.”

Sumatra Earthquake Performance Results

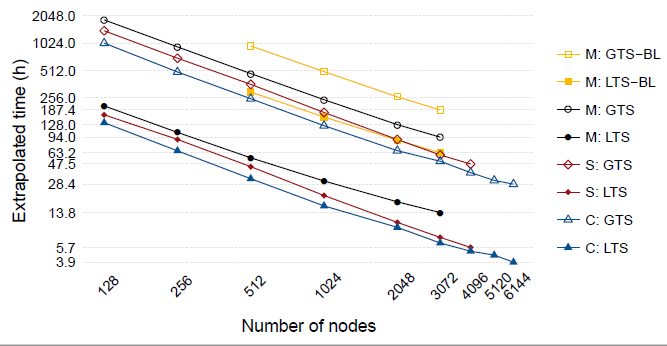

Figure 2 shows the extrapolated time to compute the entire Sumatra scenario on three different supercomputers and compares time to solution for LTS and global time stepping (GTS) for each of the machines. The team worked with researchers at NERSC to run scalability tests to verify optimization of their new SeisSol version (Shaking Corals) for Intel Xeon Phi processors.

“The fact that SeisSol scales beyond 6,000 compute nodes on Cori gives us confidence that SeisSol is well-prepared for the next generation of machines, such as SuperMUC-NG, which will be installed in Munich in 2018,” said Bader.

Figure 2. Performance of Sumatra simulation on three supercomputers. M: SuperMUC Phase 2; S: Shaheen II; C: Cori; BL: Baseline. Courtesy of TUM.

Challenges in Future Earthquake Research

The LMU-TUM research is a major step forward in evaluating megathrust earthquakes and tsunamis. Yet much work remains on using HPC systems and software models to simulate such large-scale disasters. “Future machines will render urgent, real-time supercomputing possible, for example to quantify hazard in aftershock regions,”said Gabriel.

Bader states, “In the future, we need to further improve our models for wave propagation as well as to move from single runs to solve more complex problems. For example, an important simulation model of the future should be able to determine the earthquake’s rupture dynamics when you observe an earthquake.”

Linda Barney is the founder and owner of Barney and Associates, a technical/marketing writing, training and web design firm in Beaverton, OR.