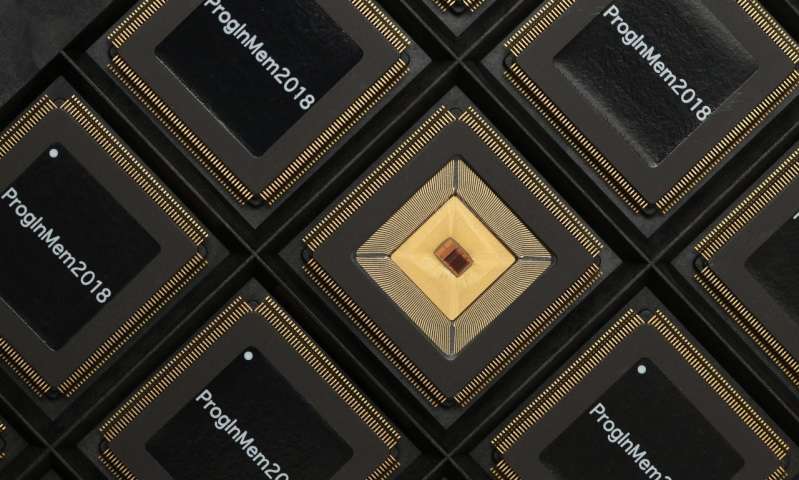

Credit: Princeton University

A new computer chip that combines memory with computation improves performance and reduces the energy needed for artificial intelligence systems.

Princeton University researchers developed the chip, which works with standard programming languages and could be particularly useful for phones, watches and other devices that rely on high-performance computing but have limited battery life.

The chip is based on a technique called in-memory computing, which performs computation directly where the data is stored. This improves speed and efficiency by clearing a primary computational bottleneck: conventional processors must spend time and energy retrieving data from memory before they can compute on it.
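To make that bottleneck concrete, here is a toy Python model that counts how many data words cross between memory and processor during a matrix-vector multiplication, the core operation of deep-learning inference. It is a simplified sketch, not the Princeton chip's actual design. The function names and the "one word per value moved" cost model are assumptions made for illustration.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec_conventional(weights: Matrix, x: Vector) -> Tuple[Vector, int]:
    """Processor-centric model: every weight is fetched from memory before use."""
    words_moved = len(x)  # the input vector is fetched once
    y: Vector = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            words_moved += 1  # fetch one weight from memory into the processor
            acc += w * xi
        y.append(acc)
    return y, words_moved


def matvec_in_memory(weights: Matrix, x: Vector) -> Tuple[Vector, int]:
    """In-memory model: weights stay in the array; only inputs and results move."""
    words_moved = len(x)  # send the inputs into the memory array
    # Models the multiply-accumulate happening inside the array,
    # so no weight fetches are counted here.
    y = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    words_moved += len(y)  # read the results back out
    return y, words_moved


if __name__ == "__main__":
    W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    v = [1.0, 0.0, -1.0]
    print(matvec_conventional(W, v))  # ([-2.0, -2.0], 9)
    print(matvec_in_memory(W, v))     # ([-2.0, -2.0], 5)
```

Both functions return the same result; only the traffic differs. For a 256-by-256 weight matrix, the conventional model moves 65,792 words while the in-memory model moves 512, because the weights never leave the array. Real hardware has other overheads that this sketch ignores.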

The chip, together with a new system for programming it, builds on the researchers' previous work, in which they fabricated the circuitry for in-memory computing and found that it could perform tens to hundreds of times faster than comparable chips. However, that initial chip's capabilities were limited because it lacked components included in the new version.

For the new chip, the researchers integrated the in-memory circuitry into a programmable processor architecture to enable the chip to work with common computer languages such as C.

“The previous chip was a strong and powerful engine,” Hongyang Jia, a graduate student, said in a statement. “This chip is the whole car.”

While the new chip can operate on a broad range of systems, it is specifically designed to support deep-learning inference, which underpins applications such as self-driving vehicles, facial recognition systems and medical diagnostic software.

The chip's energy efficiency is crucial because many AI applications are intended to run on battery-powered devices such as mobile phones or wearable medical sensors.

“The classic computer architecture separates the central processor, which crunches the data, from the memory, which stores the data,” Naveen Verma, an associate professor of electrical engineering, said in a statement. “A lot of the computer’s energy is used in moving data back and forth.”

Memory chips are usually designed to be as dense as possible so they can store large amounts of data, whereas computation requires space for additional transistors. The new design allows memory circuits to perform calculations as directed by the chip's central processing unit.

“In-memory computing has been showing a lot of promise in recent years, in really addressing the energy and speed of computing systems,” Verma said. “But the big question has been whether that promise would scale and be usable by system designers towards all of the AI applications we really care about. That makes programmability necessary.”