“Vera Rubin” is Nvidia’s next rack-scale AI system, and Huang says it “arrives at exactly the right moment.”

Wall Street can’t seem to make up its mind about whether the current AI wave is a bubble. Some analysts are firmly in the bubble camp. Bridgewater Associates founder Ray Dalio warned Monday that the AI boom is “now in the early stages of a bubble,” estimating it’s at about 80% of the euphoria that preceded the 1929 crash or the dot-com bust. A November Bank of America survey found that 53% of fund managers already believe AI stocks are in a bubble, with “AI bubble” ranking as the top tail risk for markets.

Others aren’t so sure. Goldman Sachs notes that consensus capex estimates have proven too low for two years running. Analysts predicted 20% growth at the start of both 2024 and 2025, but actual spending growth exceeded 50% in both years. And hyperscalers face what some call a prisoner’s dilemma: pulling back on AI spending virtually guarantees losing the competitive race, even if maintaining the pace risks profitability.

Nvidia CEO Jensen Huang has been blunt about which side he’s on. “There’s been a lot of talk about an AI bubble,” Huang said on Nvidia’s earnings call in November. “From our vantage point, we see something very different.” Last year, the CEO said Nvidia envisions “a three-to-four trillion AI infrastructure opportunity,” adding that the company is “at the very beginning of this buildout.”

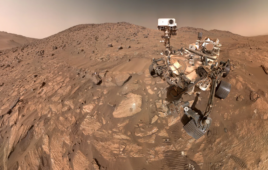

At CES 2026 on Monday, Huang doubled down with hardware. He told the audience that Nvidia’s next platform, Vera Rubin, is already in “full production” and “arrives at exactly the right moment” as AI computing demand is “going through the roof.” Systems are slated for deployment in the second half of 2026.

What Vera Rubin delivers over Blackwell

Rubin is Nvidia’s attempt to make the expensive part of AI cheaper: generating tokens, especially as models shift from quick answers to longer “thinking” traces. The headline numbers are efficiency gains over Blackwell, Nvidia’s predecessor architecture, not just raw speed:

- 10x reduction in inference token cost compared to Blackwell

- 4x fewer GPUs needed to train mixture-of-experts (MoE) models

- 5x improved power efficiency from the new Spectrum-X Ethernet Photonics switches

On raw compute, each Rubin GPU delivers 50 petaflops of NVFP4 inference performance, 5x higher than Blackwell’s GB200, and 35 petaflops for training (3.5x improvement). Each GPU packs 288 GB of HBM4 memory with 22 TB/s of bandwidth, up from Blackwell’s HBM3e.

At rack scale, a full Vera Rubin NVL72 system packs 72 GPUs delivering 3.6 exaflops of inference performance, with 20.7 TB of HBM4 capacity. Nvidia claims the NVLink 6 interconnect provides 260 TB/s of scale-up bandwidth, or “more bandwidth than the entire internet.”
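The rack-level figures follow from the per-GPU numbers, and a quick back-of-the-envelope check makes that visible. The Python sketch below uses only figures quoted in this article; the variable names are illustrative, the Blackwell baseline is merely implied by the stated multipliers (it is not a quoted spec), and the per-GPU NVLink share assumes the rack bandwidth divides evenly across all 72 GPUs.

```python
# Figures quoted in the article for Rubin and the Vera Rubin NVL72 rack.
GPUS_PER_RACK = 72
INFERENCE_PFLOPS_PER_GPU = 50    # NVFP4 inference, petaflops
TRAINING_PFLOPS_PER_GPU = 35     # training, petaflops
HBM4_GB_PER_GPU = 288            # gigabytes
NVLINK6_RACK_TB_PER_S = 260      # scale-up bandwidth for the whole rack

# Rack totals (decimal units: 1 exaflop = 1000 petaflops, 1 TB = 1000 GB).
rack_inference_exaflops = GPUS_PER_RACK * INFERENCE_PFLOPS_PER_GPU / 1000
rack_hbm4_tb = GPUS_PER_RACK * HBM4_GB_PER_GPU / 1000

# Assumption: bandwidth is shared evenly, so this is only a rough per-GPU share.
nvlink_tb_per_s_per_gpu = NVLINK6_RACK_TB_PER_S / GPUS_PER_RACK

# Blackwell baseline implied by the article's 5x and 3.5x multipliers.
implied_blackwell_inference_pflops = INFERENCE_PFLOPS_PER_GPU / 5
implied_blackwell_training_pflops = TRAINING_PFLOPS_PER_GPU / 3.5

print(f"Rack inference: {rack_inference_exaflops:.1f} exaflops")
print(f"Rack HBM4:      {rack_hbm4_tb:.1f} TB")
print(f"NVLink share:   {nvlink_tb_per_s_per_gpu:.1f} TB/s per GPU")
print(f"Implied Blackwell baseline: {implied_blackwell_inference_pflops:.0f} PF inference, "
      f"{implied_blackwell_training_pflops:.0f} PF training")
```

The totals line up with Nvidia’s rack-scale claims: 72 GPUs at 50 petaflops each is 3.6 exaflops, and 72 times 288 GB is roughly 20.7 TB.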

The architecture also introduces the Vera CPU, built on 88 custom Arm-based “Olympus” cores, designed specifically for agentic AI workloads that require multistep reasoning across long token sequences.

The bigger picture: Picks, shovels, and who’s actually making money

Nvidia’s efficiency claims matter because of who’s footing the bill. A full Blackwell GB200 NVL72 rack runs around $3 million; Vera Rubin systems will likely cost more. Goldman Sachs found that hyperscalers have taken on $121 billion in debt over the past year to finance data center buildouts, a more than 300% increase from typical levels, as NPR noted.

The math is uncomfortable. AI-related services are expected to deliver only about $25 billion in revenue in 2025, roughly 10% of what hyperscalers are spending on infrastructure, as TradingView noted. Nvidia, meanwhile, posted $51.2 billion in data center revenue in a single quarter. The classic “picks and shovels” dynamic is in full effect: Nvidia sells the tools; most everyone else hopes the gold materializes.
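To make the gap concrete, here is a rough Python sketch using only the figures cited above. The variable names are illustrative, and the implied spending total is derived from the “roughly 10%” ratio rather than reported directly, so treat it as an approximation.

```python
# Figures cited in the article, in billions of US dollars.
ai_services_revenue_2025_bn = 25.0   # expected AI-related services revenue
revenue_share_of_spending = 0.10     # "roughly 10%" of hyperscaler infrastructure spending
nvidia_dc_revenue_quarter_bn = 51.2  # Nvidia data center revenue, single quarter

# Infrastructure spending implied by the 10% ratio (an approximation, not a reported figure).
implied_infrastructure_spend_bn = ai_services_revenue_2025_bn / revenue_share_of_spending

# How one Nvidia quarter compares with a full year of expected AI services revenue.
quarter_to_year_ratio = nvidia_dc_revenue_quarter_bn / ai_services_revenue_2025_bn

print(f"Implied infrastructure spending: ~${implied_infrastructure_spend_bn:.0f} billion")
print(f"One Nvidia quarter vs. a year of AI services revenue: {quarter_to_year_ratio:.1f}x")
```

On these numbers, a single quarter of Nvidia’s data center revenue is about twice what AI-related services are expected to bring in over all of 2025.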

That dynamic is driving hyperscalers to hedge their bets. Google, Amazon, Meta, Microsoft and now OpenAI are all designing custom ASICs, application-specific chips optimized for their own workloads. Google reports that over 75% of its Gemini model computations now run on its internal TPU fleet. Amazon’s Trainium chips fill its biggest AI data center, where Anthropic trains models on half a million Trainium2 chips.

But internal AWS data from April 2024 showed Trainium at just 0.5% of Nvidia GPU usage, as MLQ noted. The gap between announced capability and actual adoption remains wide. Custom ASICs pay off in the long run for companies that can afford them, but designing one starts at tens of millions of dollars, and “of the ASIC players, Google’s the only one that’s really deployed this stuff in huge volumes,” according to Bernstein semiconductor analyst Stacy Rasgon.

Rubin’s 10x token cost reduction is Nvidia’s answer to both the ROI problem and the custom silicon threat: make inference inexpensive enough that the economics work, and fast enough that building your own chip looks like a distraction.

Whether that’s enough depends on which side of the bubble debate you believe.
