Written by Kristin Malavenda, Purdue University

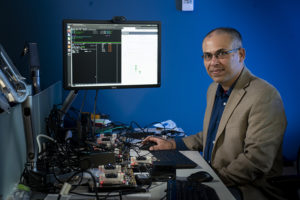

Saurabh Bagchi and his team have developed a method that allows small devices in the Internet of Things to perform analytics on streaming video. (Purdue University photo/Vince Walter)

Purdue University researchers received an Amazon Research Award for developing a method to perform analytics on streaming video on small devices linked together in the Internet of Things (IoT). The method can perform computationally expensive tasks, such as object detection and face recognition, in real time while providing guarantees about their accuracy.

Saurabh Bagchi, professor of electrical and computer engineering and computer science (by courtesy) at Purdue University, and his team developed the method in collaboration with researchers from the University of Wisconsin-Madison. The work was recently recognized by Amazon Web Services (AWS) through the Amazon Research Awards (ARA) program as one of 18 awardees worldwide in the Applied Machine Learning competition. The program offers unrestricted funds and AWS promotional credits to support research at academic institutions and nonprofit organizations.

“It had been believed to be impossible to create machine learning models for these demanding tasks that can provide some guarantees, even in the face of uncertainties,” said Bagchi, who also directs the Center for Resilient Infrastructures, Systems and Processes (CRISP). “Hopefully, this provides the community with a path forward, including adoption in many real-world settings where this is a current technology bottleneck.”

The selected team includes Somali Chaterji, assistant professor of agricultural and biological engineering at Purdue, and Yin Li, assistant professor of biostatistics and computer sciences at the University of Wisconsin-Madison.

Our world is increasingly equipped with edge devices that have powerful system-on-chip platforms and high-resolution integrated cameras. Examples include intelligent cameras, smartphones, augmented reality/virtual reality headsets, and industrial and home robots. With recent advances in computer vision and deep learning, there is a growing push to run advanced computer vision functions on these devices, such as image classification, object detection and activity recognition.

These developments have ignited an explosion of continuous vision applications, ranging from immersive AR games to interactive photo editing, and from autonomous driving to video surveillance. Many of these applications call for real-time streaming video analytics on the edge, both because of low-latency requirements (keeping up with video at 30 frames per second) and because of privacy concerns (keeping the raw video on the device and sharing only the results of the analytics).

Bagchi’s team developed a method to execute demanding streaming algorithms on such devices, which are typically constrained in their computational, communication and energy capacities. This is the first approach that can provide probabilistic guarantees on accuracy even when the characteristics of the content change, for instance, when one part of a video shows a complex scene with many objects and high motion while another part shows a relatively static scene with few objects.

Bratin Saha, vice president of machine learning at AWS, has been providing the application context for this work.

“The line of work by Saurabh and his collaborators is of interest as it pushes the limit for what can be done with lightweight machine learning techniques,” Saha said. “We are providing both intellectual input and compute resources to help move this work forward quickly, and we’re excited to see how this innovative research can be applied in IoT devices and edge computing.”

The method can also handle resource contention on the device, which can arise from other concurrently running applications. For example, while the video stream is being analyzed, the device may detect audio that also needs to be analyzed in real time.
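To make the idea of adapting to scene content and device contention concrete, here is a toy sketch in Python. It is not the team's algorithm, which the article does not describe in detail. The three configuration names, their latency and accuracy numbers, and the simple latency model are all hypothetical assumptions chosen for illustration. The sketch picks the most accurate configuration predicted to finish within the per-frame budget at 30 frames per second, falling back to the cheapest one when nothing fits.

```python
from dataclasses import dataclass

# Per-frame time budget at 30 frames per second (~33.3 ms).
FRAME_BUDGET_MS = 1000 / 30


@dataclass(frozen=True)
class Config:
    """A hypothetical detector configuration (e.g., model variant or input size)."""
    name: str
    base_latency_ms: float  # assumed latency on a simple scene with an otherwise idle device
    accuracy: float         # assumed offline-profiled accuracy


# Hypothetical profile; a real system would measure these on the target device.
CONFIGS = [
    Config("small", 8.0, 0.55),
    Config("medium", 15.0, 0.65),
    Config("large", 28.0, 0.72),
]


def predicted_latency_ms(cfg: Config, num_objects: int, cpu_share: float) -> float:
    """Toy latency model (an assumption): cost grows with the number of objects
    in the scene and with contention, expressed as the share of the processor
    this application gets (0 < cpu_share <= 1)."""
    complexity_factor = 1.0 + 0.05 * num_objects
    return cfg.base_latency_ms * complexity_factor / cpu_share


def choose_config(num_objects: int, cpu_share: float,
                  budget_ms: float = FRAME_BUDGET_MS) -> Config:
    """Return the most accurate configuration predicted to meet the budget,
    or the cheapest configuration if none is predicted to fit."""
    feasible = [c for c in CONFIGS
                if predicted_latency_ms(c, num_objects, cpu_share) <= budget_ms]
    if not feasible:
        return min(CONFIGS, key=lambda c: c.base_latency_ms)
    return max(feasible, key=lambda c: c.accuracy)


if __name__ == "__main__":
    print(choose_config(2, 1.0).name)   # static scene, idle device -> large
    print(choose_config(20, 1.0).name)  # busy scene, idle device -> medium
    print(choose_config(20, 0.5).name)  # busy scene, audio task sharing the CPU -> small
```

In this toy model, a busier scene or a competing task (such as the audio analysis mentioned above) pushes the selection toward a cheaper, less accurate configuration so the video keeps up in real time. Turning such a choice into an accuracy guarantee, as the researchers describe, would require far more careful modeling than this sketch attempts.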

Bagchi and his team describe how their approximate object classification on edge devices works in a forthcoming article in ACM Transactions on Sensor Networks.

“In terms of its ability to provide accuracy guarantees, this work points to how computer vision models can be deployed for critical applications on mobile and wearable devices. Our technology might be used to help smartphones and augmented reality glasses to understand streaming videos with high accuracy and great efficiency,” said Li, co-investigator on the project.

As the research team looks to deploy video applications in domains where safety and security are at stake, it becomes important to be able to reason about the guarantees such applications provide.

“This work is applicable to a variety of domains — those that demand low inference latencies with formal accuracy guarantees, such as for self-driving cars and drone-based relief and rescue operations,” Chaterji said. “It is also applicable to IoT for digital agriculture applications where on-device computation requires approximation of the neural network architectures. When we started the investigation, even the leading-edge devices and software stack could only support one or two frames per second. Now, we can support all the way up to 33 milliseconds [per frame, or about 30 frames per second], which is desirable for real-time video processing.”
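For reference, the per-frame time budget follows directly from the frame rate $f$. The figures below are simple arithmetic on the rates quoted in this article, not additional results from the researchers: 30 frames per second leaves about 33 milliseconds per frame, while one or two frames per second corresponds to 1,000 or 500 milliseconds per frame.

$$
t_{\text{frame}} = \frac{1}{f}, \qquad
t_{\text{frame}}\Big|_{f = 30\ \text{fps}} = \frac{1000\ \text{ms}}{30} \approx 33.3\ \text{ms}, \qquad
t_{\text{frame}}\Big|_{f = 1\ \text{or}\ 2\ \text{fps}} = 1000\ \text{or}\ 500\ \text{ms}.
$$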

Tarek Abdelzaher, the Sohaib and Sara Abbasi Professor of Computer Science at the University of Illinois at Urbana-Champaign and a leading researcher in the field of cyberphysical systems, sees the work’s value in making machines more efficient.

“The work is a beautiful example of resource savings by understanding what’s more important and urgent in the scene,” said Abdelzaher, who is not affiliated with this project. “Humans are very good at focusing visual attention on where the action is instead of spending it equally on all elements of a complex scene. They evolved to optimize capacity by instinctively spending cognitive resources where they matter most. This paper is a step toward endowing machines with the same instinct – the innate ability to give different elements of a scene different levels of computational attention, thus significantly improving the trade-off between urgency, quality and resource consumption.”

This research has been supported by the National Science Foundation and the Army Research Lab.
