Self-driving cars could revolutionize transportation, reducing traffic and accidents, increasing productivity, and providing newfound independence for those unable to drive. But creating a fully automated vehicle is no easy task. It requires an advanced system capable of handling hundreds of actions at one time, such as perceiving distance and detecting objects, identifying lane markings, other cars, and pedestrians, and responding to weather changes, traffic changes and other unexpected situations.

That’s where NVIDIA DRIVE comes in. The scalable artificial intelligence (AI) platform enables automakers, truck makers, tier 1 suppliers, and startups to accelerate production of automated and autonomous vehicles. The architecture is available in a variety of configurations, ranging from a passively cooled mobile processor operating at 10 watts, to a multi-chip configuration with four high performance AI processors which enables Level 5—fully driverless—autonomous driving.

“We have essentially developed what we call an end-to-end platform,” said Danny Shapiro, NVIDIA’s Senior Director of Automotive, in an interview with R&D Magazine. “It goes from the data center to the vehicle. We have systems for training the neural networks, building the AI, and that all happens in the data center. We then have systems for testing and validating the software, all the perception layers and the algorithms, we do that via simulations.”

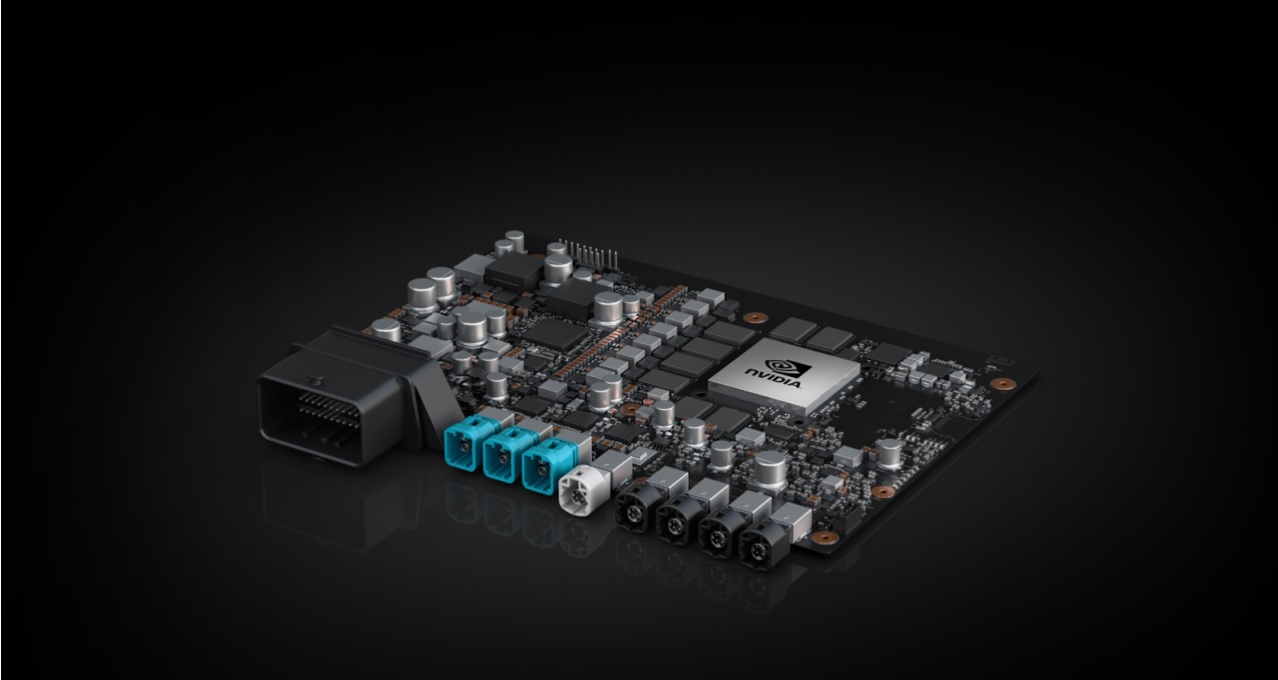

The platform includes Xavier, a complete system-on-chip (SoC) that integrates a new graphics processing unit (GPU) architecture called Volta, a custom eight-core CPU architecture, and a new computer vision accelerator. It features 9 billion transistors and will deliver 30 trillion operations per second (TOPS) of performance while consuming only 30 watts of power.

This technology, designed to serve as the ‘brain’ of self-driving cars, was created by more than 2,000 NVIDIA engineers over a four-year period, following an investment of $2 billion in R&D. Xavier is the most complex SoC ever created, said Shapiro.

Xavier is also a key part of the NVIDIA DRIVE Pegasus AI computing platform, the world’s first AI car supercomputer designed for fully autonomous Level 5 robotaxis.

Pegasus delivers 320 TOPS of deep learning calculations and can run numerous deep neural networks at the same time.

“Xavier is the single processor and then DRIVE Pegasus is two Xaviers and two of our next-generation GPUs,” said Shapiro. “Depending on the level of autonomous driving needed, there are different levels of computing that will be required and different levels of backup systems.”

How it works

The NVIDIA DRIVE systems fuse data from multiple cameras, as well as LiDAR, radar and ultrasonic sensors. This allows algorithms to accurately understand the full 360-degree environment around the car and produce a robust representation, including static and dynamic objects. Use of deep neural networks for the detection and classification of objects increases the accuracy of the fused sensor data.
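To make the idea of fusing sensor data concrete, here is a minimal Python sketch. It is not NVIDIA's implementation: the `Detection` type, the sensor labels, the 2-metre gating distance and the rule for combining confidences are all illustrative assumptions. It groups detections from different sensors that land near the same position and merges each group into a single object whose confidence is higher than any one sensor's.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    sensor: str        # illustrative labels such as "camera", "lidar", "radar"
    x: float           # position in metres, vehicle frame (assumed convention)
    y: float
    confidence: float  # 0.0 .. 1.0


def fuse(detections, gate_m=2.0):
    """Greedily cluster detections within gate_m metres, then merge each cluster."""
    clusters = []
    for det in detections:
        for cluster in clusters:
            first = cluster[0]
            if hypot(det.x - first.x, det.y - first.y) <= gate_m:
                cluster.append(det)
                break
        else:
            clusters.append([det])

    fused = []
    for cluster in clusters:
        total = sum(d.confidence for d in cluster)
        if total > 0:
            # Confidence-weighted mean position.
            x = sum(d.x * d.confidence for d in cluster) / total
            y = sum(d.y * d.confidence for d in cluster) / total
        else:
            # No sensor is confident: fall back to a plain mean.
            x = sum(d.x for d in cluster) / len(cluster)
            y = sum(d.y for d in cluster) / len(cluster)
        # Chance that at least one sensor is right, assuming independent errors.
        miss = 1.0
        for d in cluster:
            miss *= 1.0 - d.confidence
        fused.append({
            "x": x,
            "y": y,
            "confidence": 1.0 - miss,
            "sensors": sorted({d.sensor for d in cluster}),
        })
    return fused


readings = [
    Detection("camera", 10.0, 0.5, 0.8),
    Detection("lidar", 10.4, 0.3, 0.9),
    Detection("radar", 35.0, -2.0, 0.6),
]
objects = fuse(readings)
```

In this example the camera and LiDAR readings are merged into one object, while the distant radar return stays separate. A production system would track objects over time and model each sensor's error characteristics rather than rely on a fixed distance gate.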

The same architecture is used across all the NVIDIA DRIVE systems, including Xavier and Pegasus.

“What we’ve done is we’ve created a single architecture. Whether it’s a chip, an SOC or a GPU or data center rack, or an in-vehicle supercomputer, it’s all on the same NVIDIA architecture,” said Shapiro. “This is something that has been a huge advantage to the industry as it allows us to develop on one platform without having to port everything over to put it in the vehicle. Everything from the data center to the car is based on the same unified architecture and has binary compatibility.”

The technology starts in the data center, where data is collected and processed. There the supercomputer is trained in a modeling system using deep learning to recognize pedestrians, signs, emergency vehicles, and everything else a vehicle might encounter on the road. This is done using NVIDIA DGX Systems for deep learning and analytics, which are built on the new NVIDIA Volta GPU platform. The new NVIDIA DGX-2 is the largest GPU ever created, according to Shapiro.

“All that training requires a massive amount of data,” said Shapiro. “We have our DGX series, and our newest one, the DGX-2 is capable of two petaflops; this is a massive AI supercomputer and it is comprised of 16 high performance GPUs that are used to train.”

DRIVE Xavier autonomous machine processor. Credit: NVIDIA

Safety testing

After the system is trained, it is tested in a simulation system called NVIDIA DRIVE Constellation.

This is a cloud-based system for testing autonomous vehicles using photorealistic simulation. The computing platform is based on two different servers. The first runs NVIDIA DRIVE Sim software to simulate a self-driving vehicle’s sensors, such as cameras, LiDAR and radar. The second contains a powerful NVIDIA DRIVE Pegasus AI car computer that runs the complete autonomous vehicle software stack and processes the simulated data as if it were coming from the sensors of a car driving on the road.
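The two-server arrangement can be pictured as a loop in which one side produces sensor data and the other reacts to it. The Python sketch below is a toy illustration under stated assumptions, not the DRIVE Sim or DRIVE Pegasus interface: `SensorSimulator` and `DrivingStack` are hypothetical stand-ins, the world is a single obstacle on a straight road, and feeding the driving command back into the simulator is assumed here in order to close the loop.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    time_s: float
    obstacle_distance_m: float  # stand-in for camera, LiDAR and radar data


class SensorSimulator:
    """Hypothetical stand-in for the first server: generates simulated sensor data."""

    def __init__(self, obstacle_at_m=50.0, speed_mps=10.0):
        self.position_m = 0.0
        self.speed_mps = speed_mps
        self.obstacle_at_m = obstacle_at_m
        self.time_s = 0.0

    def step(self, brake, dt=0.1):
        # Apply the previous command, then advance the simulated world.
        if brake:
            # Assumed constant deceleration of 8 m/s^2.
            self.speed_mps = max(0.0, self.speed_mps - 8.0 * dt)
        self.position_m += self.speed_mps * dt
        self.time_s += dt
        return SensorFrame(self.time_s, self.obstacle_at_m - self.position_m)


class DrivingStack:
    """Hypothetical stand-in for the second server: the software under test."""

    def decide(self, frame, brake_within_m=20.0):
        # Brake once the obstacle is inside the assumed safety distance.
        return frame.obstacle_distance_m <= brake_within_m


def run_scenario(steps=200):
    sim, stack = SensorSimulator(), DrivingStack()
    brake = False
    min_gap_m = float("inf")
    for _ in range(steps):
        frame = sim.step(brake)      # simulator produces sensor data
        brake = stack.decide(frame)  # driving software reacts to it
        min_gap_m = min(min_gap_m, frame.obstacle_distance_m)
    return {"stopped": sim.speed_mps == 0.0, "min_gap_m": min_gap_m}


result = run_scenario()
```

Because the driving software only ever sees `SensorFrame` objects, it cannot tell whether the data came from a simulator or a real car, which is the property that lets the same software be validated before it goes on the road.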

“We want to make sure this technology is safe before it goes on the road for testing and certainly before deployment,” said Shapiro. “Essentially what we do is we take the hardware for DRIVE Xavier and DRIVE Pegasus that would normally go into the vehicle and we put it into a data center rack solution and actually simulate all that sensor data. We can take actual sensor data and run it through or we can generate sensor data and validate all the software running on the actual hardware, but do it in a safe virtual world.”

The system makes it possible to test scenarios that rarely occur in the real world but that a self-driving car must be able to respond to.

“This lets us really be much more effective than driving these vehicles around because as you are driving you generally don’t see the real challenging situations,” said Shapiro. “You don’t encounter the person running the red light very often. If you want to test the effect of blinding sun at sunset, you get about three minutes a day when you can do that in the real world. In the virtual reality simulator we can test blinding sun on all roads, we don’t have to wait, and we can test blinding sun 24 hours a day. We can test how the car would react to a child running out from in between parked cars, without putting anyone in harm’s way. This type of system will allow us to test millions and billions of miles in VR and be able to ensure that the systems are safe before we put them on the road.”

The systems are designed with a diversity of processor types and algorithms as well as many redundant systems in case of failure. They are designed to constantly monitor themselves and detect any type of system failure.

It is essential to have both diversity and redundancy in a system, said Shapiro.

“If you have a redundant system and it’s running the exact same code and there is a bug in the code, it’s going to run the same bug twice,” he said. “Where if you have both redundancy and diversity, like an algorithm that is running to detect objects in front of you and a different algorithm that is detecting free space, which is the lack of anything in front of you, the two different techniques give a sort of double check that there is nothing in front of the vehicle.”
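The double check Shapiro describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the 30-metre lookahead and the one-dimensional inputs (a list of classified object distances for one algorithm, a list of raw range readings for the other) are hypothetical and not taken from NVIDIA's software. The path counts as clear only when both independent checks agree, and a disagreement is reported so the system can respond to it.

```python
def objects_ahead(object_distances_m, lookahead_m):
    """Algorithm A (assumed): classified objects that lie within the lookahead."""
    return [d for d in object_distances_m if d <= lookahead_m]


def free_space_ahead(range_readings_m):
    """Algorithm B (assumed): distance free of any return, from raw range data.

    With no readings at all, no free space can be confirmed, so return 0.0.
    """
    return min(range_readings_m) if range_readings_m else 0.0


def path_is_clear(object_distances_m, range_readings_m, lookahead_m=30.0):
    no_objects = not objects_ahead(object_distances_m, lookahead_m)
    enough_free_space = free_space_ahead(range_readings_m) >= lookahead_m
    return {
        # Proceed only if both techniques say the way is clear.
        "clear": no_objects and enough_free_space,
        # A mismatch hints that one of the two algorithms is wrong.
        "disagreement": no_objects != enough_free_space,
    }


both_clear = path_is_clear([], [45.0, 60.0, 52.0])
missed_object = path_is_clear([], [12.0, 60.0, 52.0])
both_blocked = path_is_clear([12.0], [12.0, 60.0])
```

In the `missed_object` case the object detector reports nothing, but the free-space check sees a return at 12 metres, so the path is treated as blocked and the disagreement is flagged. Two copies of the same detector would have made the same mistake twice.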

TÜV SÜD performed a safety concept assessment of the NVIDIA Xavier SoC and confirmed its safety. The 150-year-old German firm assesses compliance with national and international standards for safety, durability and quality in cars, as well as for factories, buildings, bridges and other infrastructure.

Looking ahead

NVIDIA is currently working with 370 different companies, including car companies, truck suppliers, robotaxi companies, sensor companies, and software start-ups that are developing on the NVIDIA DRIVE platform with the intent to bring it to market in the next several years.

Customers such as Audi and Tesla already have NVIDIA DRIVE systems with lower levels of autonomy in production and are working to bring more advanced autonomous systems to the market in the next several years. Many have test prototypes on the road.

Daimler and Bosch recently selected NVIDIA DRIVE Pegasus technology to create a fleet of Level 4 and Level 5 autonomous cars, which can drive themselves fully.

Shapiro said he sees huge growth in the autonomous vehicle industry and a massive amount of investment being made in the technology that enables it. This technology could also be applied outside of self-driving cars.

“The technology that we’ve created, our GPUs and our platforms, can be used in many different applications,” he said. “An AI processor can be used in healthcare, and that is a large segment that we are involved in. The same kind of algorithms that you would use to detect a pedestrian in a self-driving car, could potentially be used to identify cancer cells. In energy exploration you could take data sets from sonar or any other seismic work and figure out where there might be oil using this type of technology. Computing is now shifting to AI computing across industries.”