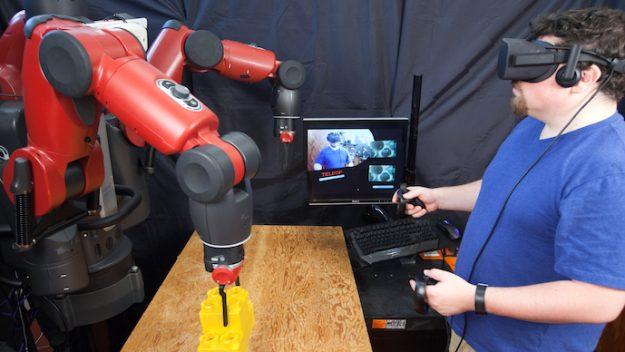

A VR system from MIT's Computer Science and Artificial Intelligence Laboratory could make it easier for factory workers to telecommute. Credit: Jason Dorfman, MIT CSAIL

While it is inevitable that robots are coming to the assembly line, thanks to virtual reality the supervisor may not even need to be in the same building.

Researchers from the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a virtual reality system that enables a user to teleoperate a robot using an Oculus Rift headset, which could transform how robots and virtual reality are used in industry.

The system places the user in a VR control room with multiple sensor displays, making them feel like they are inside the robot’s head. Using gestures, they can match their movements to the robot’s to complete various tasks.

“A system like this could eventually help humans supervise robots from a distance,” CSAIL postdoctoral associate Jeffrey Lipton said in a statement. “By teleoperating robots from home, blue-collar workers would be able to telecommute and benefit from the IT revolution just as white-collar workers do now.”

In the past, researchers have used one of two models for VR teleoperation: a direct model, in which the user’s vision is directly coupled to the robot’s state, or a cyber-physical model, in which the user interacts with a virtual copy of the robot and the environment.

The CSAIL team combined the two methods to solve the delay issue associated with the direct model, while also eliminating the need for a similar environment, because the user logs into a virtual system inside the robot’s head.

Using the Oculus controller, a user can interact with controls that appear in the virtual space to open and close the hand grippers to pick up, move and retrieve items.

A user can also plan movements based on the distance between the arm’s location marker and their hand while looking at the live display of the arm.

To make these movements possible, the researchers mapped the human’s space into the virtual space and then mapped the virtual space into the robot space to provide a sense of co-location.
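The two-stage mapping can be pictured as a chain of coordinate transforms. The sketch below is only an illustration and is not the CSAIL implementation: the `ScaleOffset` class, the scale and offset values, and the sample hand position are all hypothetical, and rotation is omitted for simplicity. It shows that mapping a point from human space to virtual space and then to robot space gives the same result as applying one combined mapping.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ScaleOffset:
    """Hypothetical mapping p -> scale * p + offset (no rotation)."""
    scale: float
    offset: Vec3

    def apply(self, p: Vec3) -> Vec3:
        # Scale each coordinate, then shift it by the matching offset.
        return tuple(self.scale * c + o for c, o in zip(p, self.offset))

    def then(self, other: "ScaleOffset") -> "ScaleOffset":
        """Return one mapping equivalent to applying self, then other."""
        # other(self(p)) = other.scale * (self.scale * p + self.offset) + other.offset
        return ScaleOffset(
            scale=other.scale * self.scale,
            offset=other.apply(self.offset),
        )


# Assumed values, chosen only for illustration.
human_to_virtual = ScaleOffset(scale=1.0, offset=(0.0, -1.0, 0.0))
virtual_to_robot = ScaleOffset(scale=0.5, offset=(0.25, 0.0, 0.5))

# Compose the two stages into a single human-to-robot mapping.
human_to_robot = human_to_virtual.then(virtual_to_robot)

hand = (0.5, 1.5, 0.25)  # hypothetical hand position in human space
step_by_step = virtual_to_robot.apply(human_to_virtual.apply(hand))
combined = human_to_robot.apply(hand)
print(step_by_step, combined)
```

A real system would also need rotation, typically handled with full homogeneous transforms, but the composition idea is the same.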

The team successfully controlled the Baxter humanoid robot from Rethink Robotics, but believes that the approach would work with other robot platforms as well.

During testing, users successfully grasped objects 95 percent of the time and were 57 percent faster at completing tasks. The team was also able to pilot the robot from hundreds of miles away.

The next step will be to make the system more scalable, supporting many users and different types of robots that are compatible with current automation technologies.