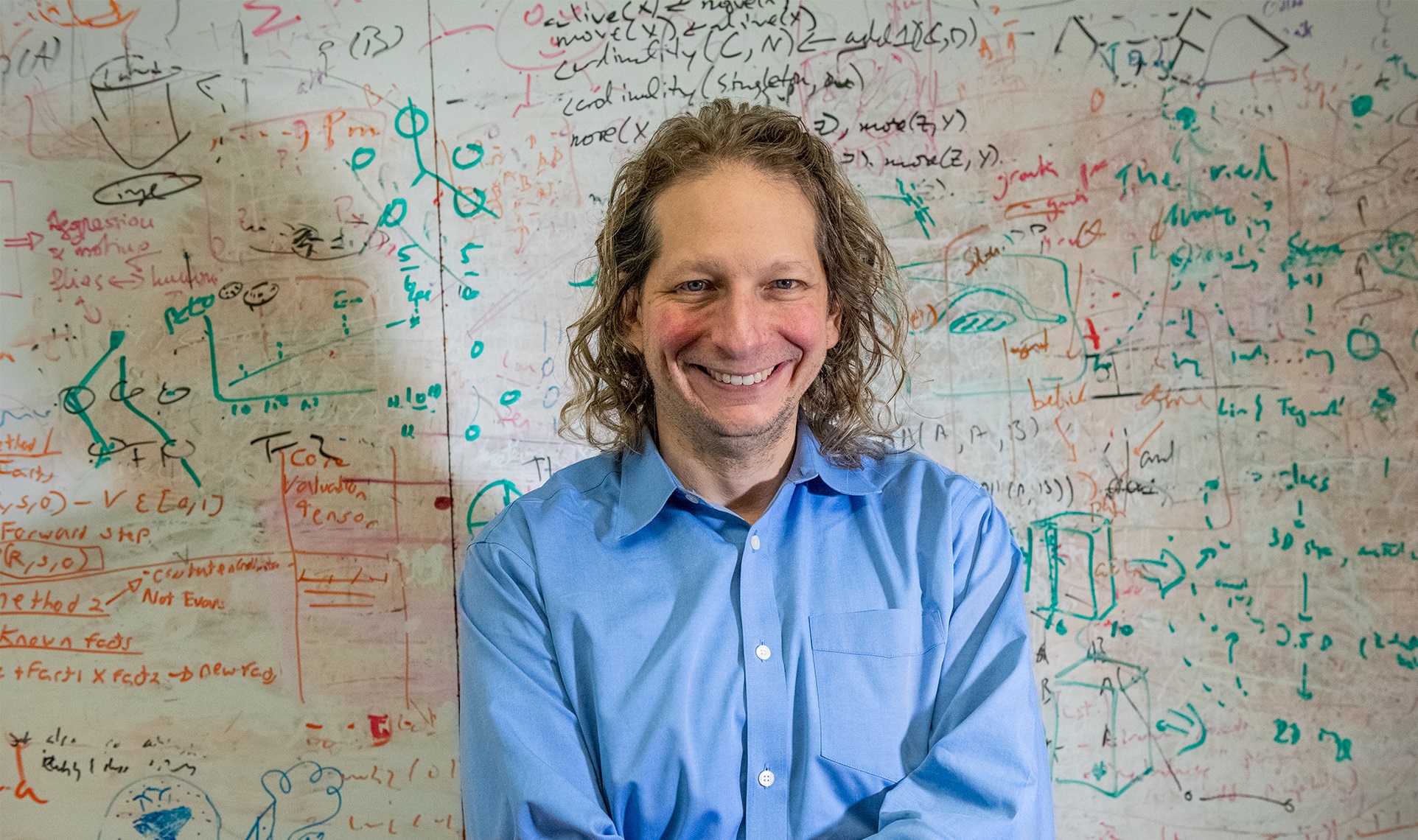

Josh Tenenbaum, PhD. Credit: Kris Brewer, CBMM

These days, artificial intelligence (AI) is everywhere—assisting doctors with complex surgeries in hospitals, allowing cars to drive themselves and giving researchers the ability to comb through large, complex datasets in minutes. AI is certainly revolutionizing a lot of fields. But is it truly intelligent?

Not yet, said Josh Tenenbaum, PhD, a professor of Computational Cognitive Science in the Department of Brain and Cognitive Sciences at the Massachusetts Institute of Technology (MIT).

“I would tend to say AI technologies are useful and they are valuable, but they are not actually intelligent,” said Tenenbaum, who is a member of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Center for Brains, Minds and Machines (CBMM), and who leads the Computational Cognitive Science lab at MIT. “They don’t really know anything. Each of these systems is built by dedicated human engineers to achieve a human goal,” he added. “But that’s not the same thing as having a goal, making a plan and understanding your place in the world and what you can do to achieve your goal. It’s a very constrained, much more limited version of that problem and that’s what we have right now in AI.”

Tenenbaum, R&D Magazine’s 2018 Innovator of the Year, is working to better understand the mechanisms of human intelligence and then apply that knowledge to AI through a multidisciplinary, two-fold approach. First, working with neuroscientists and cognitive psychologists, he is studying the origins of intelligence in the human mind and brain, particularly in young children.

“I am trying to understand the science of how human intelligence arises in the mind and brain, how it works, where it comes from—the software and the hardware,” said Tenenbaum. “What it means to me to do that science is to approach it like an engineer. I want to try and describe the computations, and ultimately maybe the machinery of the mind and the brain.”

Second, working with computer scientists and engineers, he aims to apply that knowledge to build more human-like intelligence in machines.

“This is a proof of concept, if you actually build some kind of machine or some kind of algorithm or a computer system that is more intelligent in some human-like way, even if it’s just a small step in that direction, that is where the AI innovation comes from,” he said.

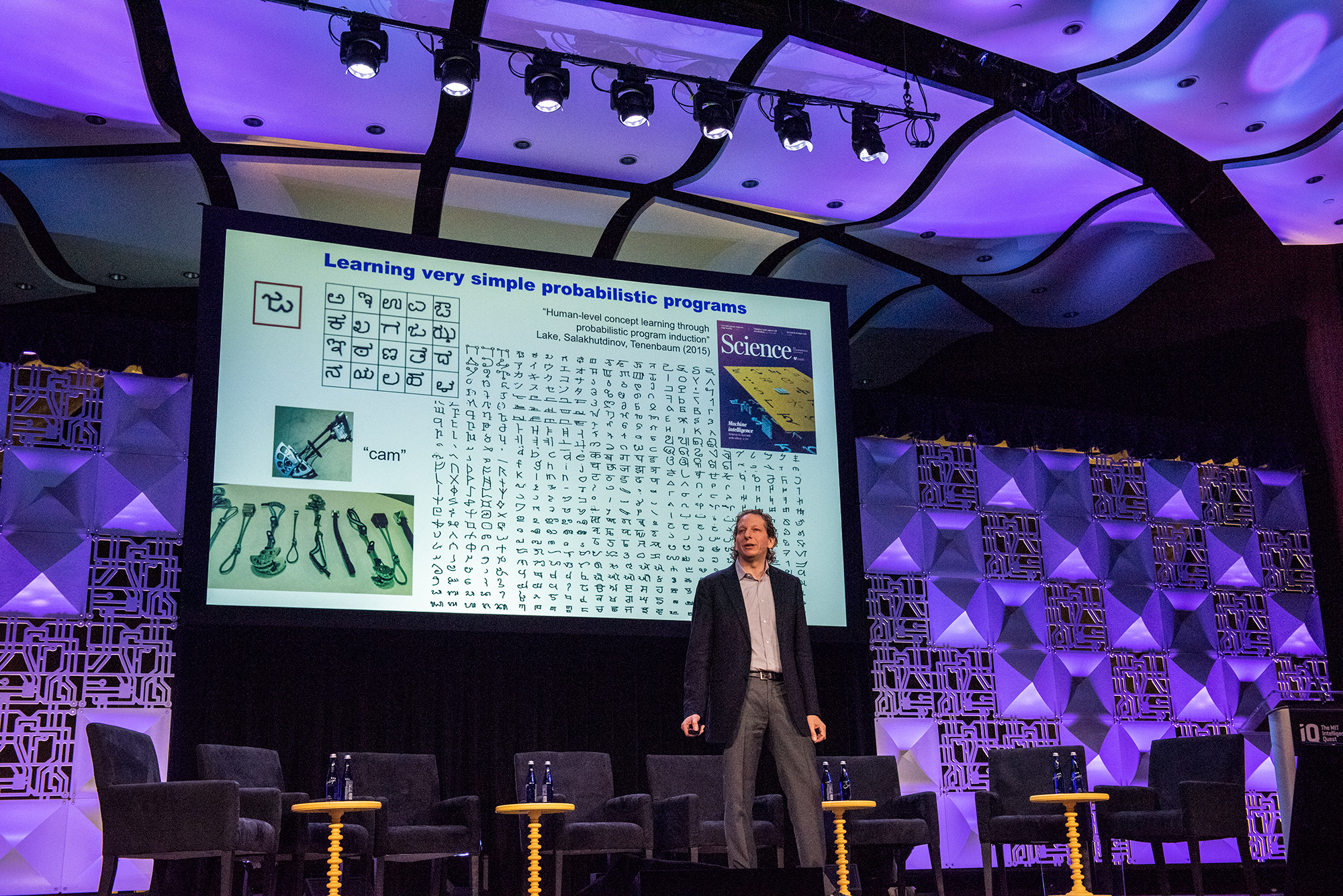

Tenenbaum’s scientific work currently focuses on two areas. The first is describing the structure, content and development of people’s common sense theories, especially intuitive physics and intuitive psychology. The second is understanding how people learn and generalize new concepts, models, theories and tasks from very few examples, an ability known as “one-shot learning.” He and his team have recreated one-shot learning artificially, designing computer programs that can learn to recognize new handwritten characters, as well as certain objects in images, after seeing just a few examples. This ability could allow future machine-learning programs to work much more efficiently, learning from minimal examples instead of requiring huge quantities of training data.

Finding his inspiration

Tenenbaum was exposed to both cognitive science and AI at an early age. While he was growing up, his father worked as an engineer and AI researcher in California, and his mother, a teacher, went on to earn a PhD in education to pursue her interest in understanding how children learn.

“I grew up being interested in learning and how you learn and teach, and also really being influenced by AI; I met a lot of early AI researchers through my Dad,” he said.

Despite these early influences, Tenenbaum didn’t immediately follow in his parents’ footsteps. When he began his undergraduate degree at Yale University, he decided to study physics. His focus started to shift after meeting several mentors, including a psychologist named Roger Shepard, PhD, who worked at Stanford University.

“Roger Shepard is one of the greatest psychologists of the twentieth century,” said Tenenbaum. “I was able to work with him in the summer to do mathematics and computational thinking to study the mind and brain. I started working on what I am doing now with him as an undergraduate and there is a pretty continuous path between that work and what I do now.”

With that inspiration, Tenenbaum went on to earn his PhD in Brain and Cognitive Sciences from MIT in 1999.

Still, it took time before he began connecting the work he was doing to the creation of human-like intelligence in AI.

“I didn’t think of myself when I was going to grad school as anything like an AI researcher,” he said. “The idea was a big one, but it wasn’t anything like the phenomenon that it is now with the level of resources it has. I would take a computer science class or two, but I was really thinking about wanting to build models of the mind and create theories of human intelligence.”

Eventually, his career shifted into a subfield of cognitive science known as computational cognitive science, which uses the tools and language of computer science and AI to think about the human mind.

Children’s minds as models

Better understanding the human mind requires “baby steps,” said Tenenbaum.

“What is currently most exciting to me, and what has driven a lot of my work recently, is thinking a lot about human children. If you like, we are taking baby steps starting from actual babies,” said Tenenbaum. “You could argue that the oldest dream of AI is this one—the idea that you could build a machine that grows into intelligence the way a person does, that starts as a baby and learns like a child.”

This concept is not new, said Tenenbaum. Computer science pioneer Alan Turing proposed the idea in 1950, asserting that the only way to develop human-like intelligence in a machine was to build something that started off like a child, because a child’s mind was presumably simpler, and then teach it much as a child is taught.

“That sounds great but it hasn’t really worked until now and we are still far from making it work because of one key thing—children’s brains and minds turned out to not be as simple as Turing thought,” said Tenenbaum.

Josh Tenenbaum at the MIT Quest for Intelligence launch in March 2018. Credit: Kris Brewer, CBMM

Researchers currently have some understanding of how babies learn, said Tenenbaum, but most of it comes from the study of cognitive development and has not been expressed as mathematical models or engineering.

“That’s my job, and my colleagues’, to really work on that,” he said.

His team has made some strides in this area, particularly in the study of what he refers to as “common sense.”

A three-month-old child doesn’t look out into the world and see patterns and pixels like a machine-learning algorithm, explained Tenenbaum. Instead, they see things (objects and people) and understand that these are what make up the world.

“That in some form seems to be built into the brain and we’ve made some progress using probabilistic programming tools to capture that basic common sense understanding of persons, places, and things in machine form,” he said. “We’ve used that to build quantitative models of how babies see and think so that we can measure it and also, in robotics, in computer vision, to give those systems some part of the very basic common sense awareness of the world around them that we think babies have.”

Children also have the ability to learn new concepts extremely quickly with little context, something that machines cannot currently do, said Tenenbaum.

“It is often noted that a system like machine vision, if you train it to recognize a cat, or a dog, or a certain kind of car, you can teach it to recognize anything if you give it enough examples,” he said. “But often you have to give it hundreds or even thousands of examples before it starts to have any generalizable concept of that object, enough that it can pick out new images. The challenge for machine learning has been how we can build generalizable concept learning systems that don’t require all of that labeled training data, having hundreds or thousands of examples.”

A child on the other hand can, at a very young age, identify and classify something based on very few examples, and sometimes—with the right context—they can recognize something with just one example, said Tenenbaum. This is a concept known as “one-shot learning.”

“For example, a child who knows about animals already you can show them one kind of new animal at the zoo and that’s all they need to get that the new thing is an animal,” said Tenenbaum. “The ability to understand how things work from very little data is the hallmark of human intelligence. We’ve been working for a while and have some accomplishments in building machine systems that can do what we call one-shot learning.”

Josh Tenenbaum (first row, far left) with colleagues from the Curious Minded Machine program, a new interdisciplinary collaboration led by Honda Research Institute and designed to imbue robots with human-like curiosity. Credit: Honda Research Institute.

To achieve these milestones, Tenenbaum works with colleagues who specialize in a wide range of fields.

He is a leader in a new MIT initiative known as the MIT Quest for Intelligence. The initiative aims to understand how human intelligence works in engineering terms and to use that knowledge to build wiser and more useful machines for the benefit of society. In addition to drawing on the more than 200 MIT investigators already working directly on the science and engineering of intelligence, the Quest has forged a number of collaborative projects with industry, such as the MIT–IBM Watson AI Lab, a joint industrial-academic laboratory focused on advancing fundamental AI research.

Tenenbaum and several of his colleagues from MIT CSAIL are also part of the Curious Minded Machine, a research collaboration initiated by the Honda Research Institute. This initiative seeks to develop intelligent systems capable of learning continuously with a human-like sense of curiosity. The MIT team is focused on the notion that any intelligent agent must have a theory of the world that can be used to infer a causal theory of sensor percepts, in order to predict future percepts and the effects of future actions.

In addition to his work with AI researchers, Tenenbaum also spends a significant amount of his time working with cognitive scientists who are designing experiments to better understand the brain and mind, such as Laura Schulz, PhD, and Rebecca Saxe, PhD, both professors of cognitive science in the Brain and Cognitive Sciences department at MIT.

“During my typical workday I could easily be spending it looking at data from children or from babies with those colleagues thinking about what experiments to do, or I could be working with other colleagues in robotics, or computer vision, or the AI lab at CSAIL, where we are building algorithms inspired by that science to try and create useable technologies. Actively participating in both of those worlds and being a bridge between them, I think that is key to what I am trying to do.”

Creating human-like intelligence

Not everyone understands the purpose or methodology of Tenenbaum’s research.

“People often say to me, ‘we have children, do we need machine children?’” he said. “The goal is not to produce machine children. To me, the number one goal is the basic science goal: to actually understand how the mind works. But the goal on the technology side is not to build a digital child or a robot baby or something, but rather to give machines the ability to be intelligent in a human world.”

This ability could pave the way for AI that interfaces with humans in the same way that humans interact with each other. AI and machines that are truly valuable to society should be able to act as partners to humans and integrate easily into human lives, even if they are far from possessing actual human abilities, said Tenenbaum.

“The goal isn’t to build machine humans, it is to build machines that have the kind of intelligence to live safely and usefully with us in a human world,” he said. “Following Turing, we think the best route arguably to do this, and the only route we know actually works, is to build that form of intelligence the way it’s built in humans, to start with something built in and then allow it to have experiences where it can grow and learn.”