By Jesse Harris, Digital Marketing Coordinator, ACDLabs

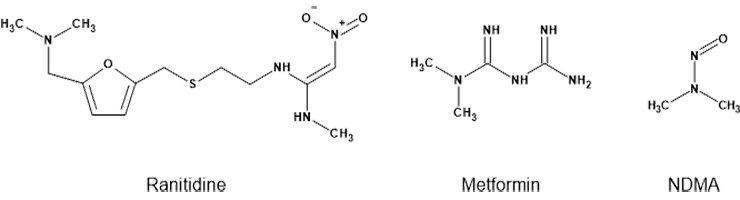

In September 2019, the FDA learned that the common heartburn medications Zantac and Axid contained elevated levels of N-nitrosodimethylamine (NDMA), a probable human carcinogen. Ranitidine and nizatidine, the active ingredients in these medications, appeared to be the source of this impurity.

On April 1, 2020, the FDA requested that all medications containing ranitidine be withdrawn from the U.S. market (1).

In December 2019, there were reports that metformin diabetes medicines contained NDMA. Although initial tests of metformin by U.S. regulators were negative, further FDA testing revealed that some extended-release metformin products contained elevated levels of NDMA (1). Recalls were issued in May 2020, followed by another round in 2022 (2).

The ultimate cause of the NDMA contamination was the same in both cases: degradation. When the FDA tested ranitidine, it found that NDMA levels increased over time, even when the product was stored at room temperature for only two weeks (1). The story with metformin was more complicated. An investigative study showed that NDMA did not form under normal storage conditions; however, it did form at the elevated temperatures reached during manufacturing, especially when residual dimethylamine (DMA) was present (3).

These are some of the latest examples of toxic degradants raising serious concerns for patient health. While there is considerable debate about the appropriate safety standards for N-nitrosamines in medicines, pharmaceutical development teams clearly need to be vigilant about degradation. Regulators expect pharmaceutical companies to fully understand the stability of both their drug substance and their drug product.

Conducting degradation testing is challenging. Scientists must run many stability studies over a range of time intervals under a wide variety of environmental conditions. These experiments also need to be coordinated to keep pace with project milestones.

Unfortunately, data management is often an obstacle in forced degradation testing. Finding, consolidating, and handling data takes considerable time and attention, slowing overall progress. Scientists working in forced degradation and stability research need data solutions designed around their workflows.

Data challenges in stability testing and forced degradation

Forced degradation research requires using many types of data. Consolidating this data is challenging due to compatibility issues and data silos. This leads to lost time and an increased risk of error.

One example of this information management challenge arises when scientists try to use a predicted degradation map. Software tools such as Lhasa’s Zeneth generate degradation maps for the drug substance and drug product, which are used to anticipate likely degradants and flag compounds that may be toxic. Once a project progresses to development, scientists use these maps to interpret their analytical data.

Ideally, researchers should be able to directly compare the predicted and experimental results, but performing this analysis is surprisingly challenging. Multiple files must be exported, assembled, and analyzed in a separate application before team members can interpret their data.

Forced degradation research also requires combining many types of analytical data to characterize complex materials. HPLC-MS, NMR, and optical data are used to dereplicate, identify, and quantify the degradants formed during stress testing. Unfortunately, each data type uses a different file format, so scientists often need to piece together a patchwork of software to analyze their data. This fragmentation is a barrier to effective decision-making.

Data handling challenges can also create project management issues. Forced degradation research involves specialized experiments, so only certain labs have the capacity to carry out this work. As a result, these tests are often spread across multiple locations and include contributions from contract organizations. While this distribution makes the best use of specialized capacity, it complicates the work of team leaders who must monitor the project’s overall progress. It can also lead to lost data or unnecessary repeat experiments.

To navigate these data challenges, pharmaceutical development researchers often rely on Excel to manage this information. Unfortunately, this creates its own set of issues.

Is Excel holding back your pharmaceutical development research?

Due to their flexibility and availability, Excel spreadsheets have become the default tool for gathering, analyzing, and visualizing scientific data assembled from various sources and abstracted to numerical and text inputs. Analytical results are exported as tables or .csv files, which are then reassembled into the spreadsheets used for managing pharmaceutical projects. However, Excel lacks much of the functionality that scientists need to complete their research efficiently.

Some of the limitations include the following:

No chemical intelligence: spreadsheets do not understand chemistry, so capabilities such as structure-based search are impossible. Structures, when included, are clunky images that are difficult to work with.

Abstracted analytical data: Excel cannot read analytical data directly. Experimental results must be exported as peak lists, which reduces spectra and chromatograms that scientists can interpret intuitively to a collection of numbers and makes reprocessing the data impossible.

Versioning issues: as described above, teams responsible for stability testing are often spread out. Shared Excel spreadsheets are prone to version conflicts, in which information is lost or overwritten because of confusion over which file is the most recent.

To keep these spreadsheets usable, many development teams spend considerable time and resources implementing procedures, plug-ins, and workarounds. Excel alone is clearly insufficient for pharmaceutical development needs. Researchers, including those involved in forced degradation testing, need a chemically intelligent scientific informatics platform built on the FAIR (Findable, Accessible, Interoperable, Reusable) data principles (4).

How Pfizer is addressing the forced degradation data problem

One company that has addressed its forced degradation data management challenge is Pfizer. Its data was spread across multiple systems, including Waters Empower, Excel spreadsheets, and electronic laboratory notebooks. To consolidate this siloed information, Pfizer implemented Luminata, a CMC (chemistry, manufacturing, and controls) decision support tool. “We required a digital solution for data management so that all data could be collated in one place,” said Dr. Hannah Davies, a principal research scientist at Pfizer.

This technology supported researchers across pharmaceutical development, including those working on forced degradation. “The power of Luminata is that all of this data can be visualized, searched, and filtered, all in one place,” Davies explained. “This facilitates rapid decision-making within CMC, across process development, forced degradation and stress testing, batch genealogy, excipient compatibility, and formulation development.”

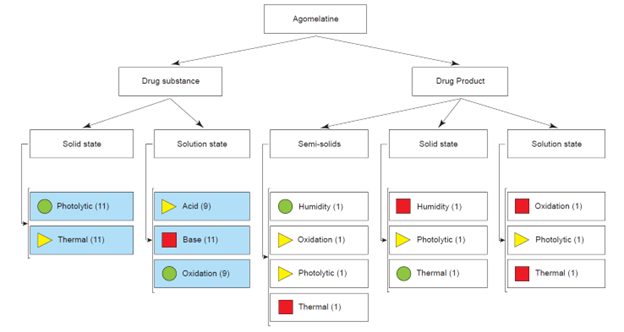

One Luminata tool that proved useful for forced degradation research was the dynamic project map. Forced degradation research requires stress experiments covering temperature, pH, light exposure (photodegradation), and more. Because these experiments are often carried out across multiple laboratories, some tests could be duplicated unnecessarily, wasting time and money. Organizing results in one interface allowed a team leader to review the project’s status and confirm that everything was progressing according to plan. Scientists could also access the results of each completed experiment to reference, review, or reprocess the analytical data.

Dynamic project map in Luminata interface, showing the progress of each set of experiments. Green circles indicate “complete,” yellow triangles indicate “in progress,” and red squares indicate “not started.” Credit: ACD/Labs

Improving your forced degradation data strategy

Pharmaceutical development scientists often spend more time managing their data than doing research. While no one is satisfied with the time and effort spent fighting with Excel, it can feel like there is no alternative. Fortunately, updating your forced degradation data management strategy is possible, but it is a commitment. Researchers must evaluate what information they need access to and then implement systems that support this consolidation. This often requires strategic thinking and collaboration across many functions within an organization.

However, upgrading your forced degradation informatics infrastructure offers considerable benefits, as Pfizer’s experience shows. Scientists can focus on their research rather than chasing down analytical data or fighting with spreadsheets. Not only does this mean more productive scientists, but it also means higher-quality medicines and healthier patients.
