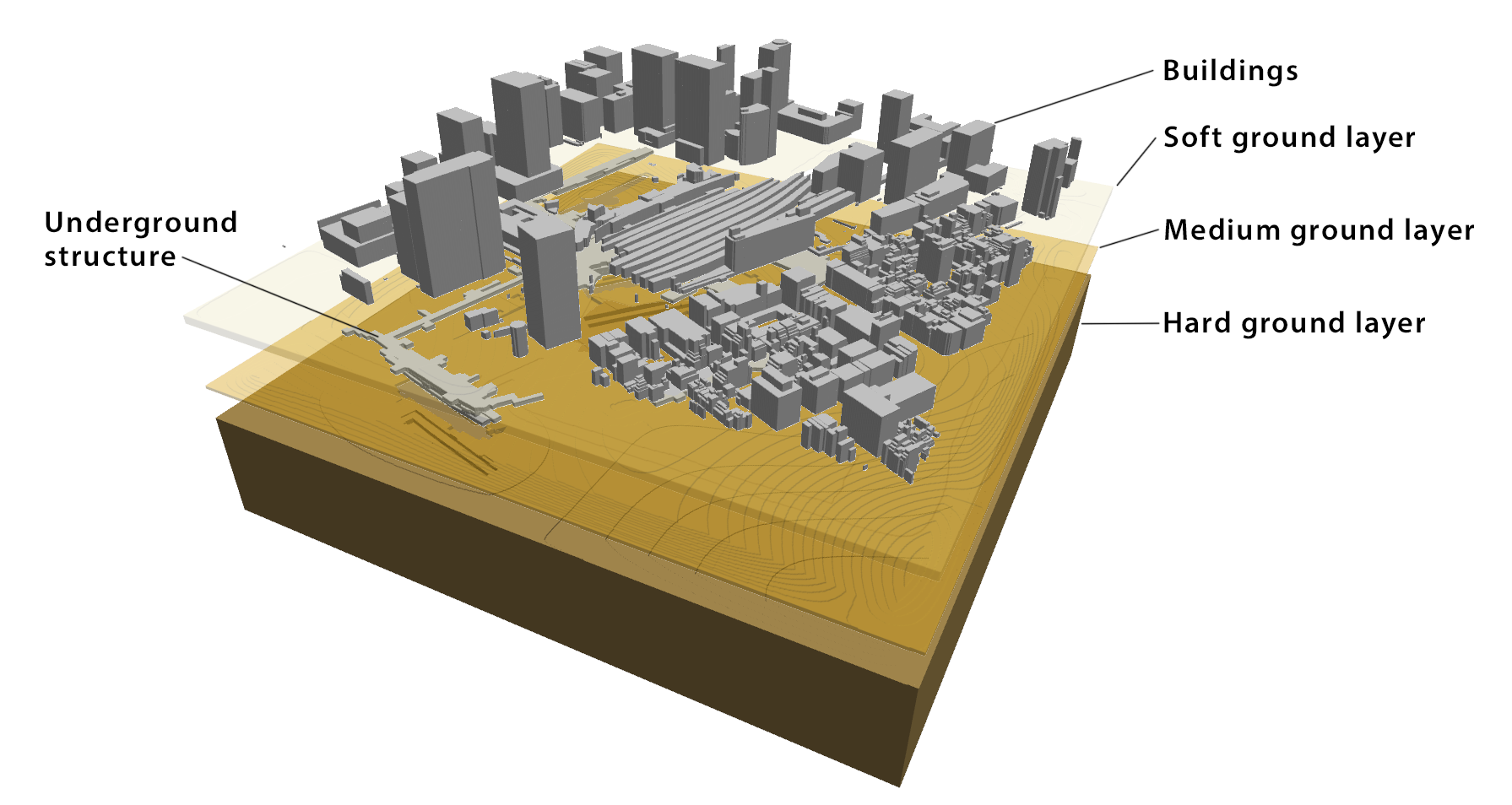

A city model of Tokyo Station and the surrounding area (1,024 m × 1,024 m × 370 m) with underground and building structures as well as two-layered ground. (Image Credit: University of Tokyo)

During a large earthquake, energy rips through the ground in the form of seismic waves that can wreak havoc on densely populated areas, causing destruction to property and loss of human life. The effects of earthquakes can be difficult to predict, and even the best modeling and simulation techniques have thus far been unable to capture some of these earthquakes’ more complex characteristics.

A team in the Earthquake Research Institute (ERI) at the University of Tokyo in Japan studies seismic waves to understand how to better manage cities at risk for these violent, complex disasters. Now, in a collaborative project with the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) and the Swiss National Supercomputing Centre (CSCS), the team has simulated an earthquake wave—accelerated using artificial intelligence (AI) and a computational technique called transprecision computing—and included, for the first time, the shaking of the ground coupled with underground and aboveground building structures in the same simulation. Additionally, the simulation was done at super high resolution relative to typical earthquake simulations.

The team ran the simulation on the world’s most powerful supercomputer, the IBM AC922 Summit at the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility at ORNL. With Summit, the researchers achieved a fourfold speedup over their state-of-the-art SC14 Gordon Bell finalist code. The ACM Gordon Bell Prize recognizes outstanding achievements in high-performance computing (HPC). Their new code, the iMplicit sOlver wiTH artificial intelligence and tRAnsprecision computing (MOTHRA), has again earned a finalist nomination for the prize.

The accomplishment has marked milestones on Summit even before the machine’s full acceptance. Whereas most deep-learning networks are used for data analysis, the team’s code is one of the first to leverage AI to accelerate an HPC problem involving simulation. The team also employed transprecision computing, which fully exploits Summit’s NVIDIA V100 GPUs by using lower-precision data types suitable for the GPU architecture.

Transprecision calculations reduce data transfer costs and accelerate computing by storing, moving, and computing only a fraction of the digits that double-precision calculations (the current HPC standard) employ. Although mixed-precision calculations typically use single- and double-precision arithmetic, transprecision computing runs the gamut. Not only did the team members employ half-precision with single- and double-precision calculations, but they also used a custom data type to further reduce data transfer costs. The team used less precision in some calculations but was able to insert the results from those calculations into equations that employed greater-precision techniques, giving them the same accuracy as double precision in less time.
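The idea of feeding low-precision results into a higher-precision calculation can be illustrated with a small sketch. The example below is not the MOTHRA code and does not use half precision or the team's custom data type. It assumes a textbook technique, mixed-precision iterative refinement, on a tiny hypothetical 3 × 3 system: the expensive solve is done with every operation rounded to single precision, while the residual and the running solution are kept in double precision. The helper names (`to_f32`, `solve_low_precision`, `refine`) are illustrative only.

```python
import struct

def to_f32(x):
    """Round a Python float (double precision) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def solve_low_precision(A, b):
    """Gaussian elimination (no pivoting) with every result rounded to float32."""
    n = len(b)
    # Augmented matrix [A | b], stored in single precision.
    M = [[to_f32(v) for v in row] + [to_f32(bi)] for row, bi in zip(A, b)]
    for k in range(n):
        for i in range(k + 1, n):
            f = to_f32(M[i][k] / M[k][k])
            for j in range(k, n + 1):
                M[i][j] = to_f32(M[i][j] - to_f32(f * M[k][j]))
    # Back substitution, also rounded to single precision.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = M[i][n]
        for j in range(i + 1, n):
            s = to_f32(s - to_f32(M[i][j] * x[j]))
        x[i] = to_f32(s / M[i][i])
    return x

def residual(A, x, b):
    """r = b - A x, computed in double precision."""
    return [bi - sum(a * xj for a, xj in zip(row, x)) for row, bi in zip(A, b)]

def refine(A, b, iterations=5):
    """Low-precision solves corrected by double-precision residuals."""
    x = solve_low_precision(A, b)
    for _ in range(iterations):
        r = residual(A, x, b)               # high precision
        d = solve_low_precision(A, r)       # low-precision correction
        x = [xi + di for xi, di in zip(x, d)]
    return x

# Hypothetical, well-conditioned test system (not from the article).
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]

x_low = solve_low_precision(A, b)
x_refined = refine(A, b)
print("single-precision residual:", max(abs(v) for v in residual(A, x_low, b)))
print("refined residual:         ", max(abs(v) for v in residual(A, x_refined, b)))
```

In this sketch, most of the arithmetic happens in the cheaper format, and a handful of double-precision residual evaluations are enough to bring the answer to double-precision accuracy.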

The project’s success demonstrates the viability of implementing these new approaches in other codes to increase their speed.

An interwoven system

Although current simulations can compute the properties of the homogeneous, hard soil deep underground, scientists seek to couple the shaking of soft soil near the surface, a difficult problem on its own, with the urban structures both above and underground. Until now, no one had ever performed a simulation that fully coupled ground and city characteristics with super high resolution.

The Tokyo team used 3D data from the Geospatial Information Authority of Japan to simulate a seismic wave spreading through Tokyo Station and the surrounding area. The simulation included hard soil, soft soil, underground malls, and subway systems in addition to the complicated buildings above ground. The team based the wave on seismic activity that occurred during an earthquake in Kobe, Japan, in 1995.

Scientists can compute problems like this faster by using a preconditioner, a tool that helps reduce the computational cost involved in a simulation. If the preconditioner can obtain a good guess of the solution beforehand, the computational cost required to get the exact solution is decreased. Typically, scientists will build preconditioners through mathematical approximations, but Ichimura’s team sought to use AI in the form of an artificial neural network (ANN) for this task.
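To make the role of a preconditioner concrete, here is a minimal sketch of a preconditioned conjugate gradient solver. It is an illustration under stated assumptions, not the team's solver: the test matrix is a hypothetical 50 × 50 tridiagonal system, and the preconditioner is a simple diagonal (Jacobi) approximation of the kind built "through mathematical approximations," not an ANN-derived one. The function `pcg` and the variable names are made up for this example.

```python
def matvec(A, x):
    """Dense matrix-vector product."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def pcg(A, b, apply_preconditioner, tol=1e-10, max_iter=500):
    """Preconditioned conjugate gradient; returns (solution, iterations used)."""
    x = [0.0] * len(b)
    r = list(b)                        # residual b - A x for the start x = 0
    z = apply_preconditioner(r)        # cheap approximate solve of A z = r
    p = list(z)
    rz = dot(r, z)
    b_norm = dot(b, b) ** 0.5
    for k in range(1, max_iter + 1):
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if dot(r, r) ** 0.5 <= tol * b_norm:
            return x, k
        z = apply_preconditioner(r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter

# Hypothetical symmetric positive definite system with a widely varying diagonal.
n = 50
A = [[0.0] * n for _ in range(n)]
for i in range(n):
    A[i][i] = 1.0 + i * i
    if i + 1 < n:
        A[i][i + 1] = A[i + 1][i] = -0.4
b = [1.0] * n

diag = [A[i][i] for i in range(n)]

def identity(r):
    """No preconditioning: plain conjugate gradient."""
    return list(r)

def jacobi(r):
    """Diagonal preconditioner: divide each entry by the matching diagonal of A."""
    return [ri / di for ri, di in zip(r, diag)]

x_plain, iters_plain = pcg(A, b, identity)
x_jacobi, iters_jacobi = pcg(A, b, jacobi)
print("iterations without preconditioner:", iters_plain)
print("iterations with Jacobi preconditioner:", iters_jacobi)
```

Both runs reach the same tolerance, but the preconditioned run needs fewer iterations because each step starts from a better approximation of the solution. A better preconditioner shrinks that count further, as long as applying it stays cheap.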

ANNs are computer systems that loosely mimic the structure of the human brain and have been employed for task-oriented problems such as speech, text, and image recognition.

ANNs can solve data analytics problems with an adequate degree of accuracy most of the time, but for physics-based simulations, this accuracy is not always enough. For this reason, the Tokyo team trained an ANN to explicitly classify the mathematical structure behind the physics in the simulations, feeding the ANN information from smaller but similar earthquake simulations. The ANN provided the team with information that helped reduce some of the unknown properties of the problem, resulting in the construction of a highly robust preconditioner with less computational cost.

The team ran the simulation on 24,576 of Summit’s GPUs using the MOTHRA code, an enhanced version of the team’s previous code that was also a Gordon Bell finalist in 2014. The ANN reduced the arithmetic count in MOTHRA by a factor of 5.56. Because of this new AI capability, the method could have applications in other city-scale natural disaster problems of similar complexity, Ichimura said.

Integrating AI and HPC is not always easy because of the accuracy needed for the majority of scientific simulations. But finding ways to allow HPC to take advantage of AI can help computational scientists address problems that were previously too complex to solve.

“These techniques, along with the capabilities of supercomputers like Summit, allow us to tackle more difficult problems,” said Kohei Fujita, assistant professor at ERI.

MOTHRA: Taking flight on Summit

The team spent about 2 months getting MOTHRA up and running at scale on Summit, maneuvering around a challenging acceptance schedule earlier this year. The machine is expected to be fully accepted later this fall, but scientists have already begun to make progress on it.

In addition to running on Summit, the team also scaled MOTHRA to the Piz Daint supercomputer at CSCS and the K computer at the RIKEN Center for Computational Science, achieving strong scalability on both. The code scaled to the full Piz Daint supercomputer and to more than 49,000 of the K computer’s 80,000 nodes, demonstrating its portability.

Of the three systems, the team conducted the largest simulation on Summit, using 24,576 of its GPUs, leading to a 25.3-fold acceleration in comparison with previous methods that did not employ AI or transprecision computing techniques.

The team members are continuing to improve MOTHRA and their other relevant codes so the Japanese government can use them to estimate the effects of disasters in Japan. This will provide opportunities for more informed evaluation and decision making by lawmakers and stakeholders. The team also plans to collaborate with seismology professors in the US to apply MOTHRA to faults in California.

“We are happy to have had such a successful result on Summit in this collaboration with the US and Europe,” Ichimura said. “This is not only very good news for Summit, but it is also good news for the computer science community as a whole, the K computer, Piz Daint, and future supercomputers, as well. These resources might help us solve problems we haven’t ever been able to think about—much less simulate—until now.”