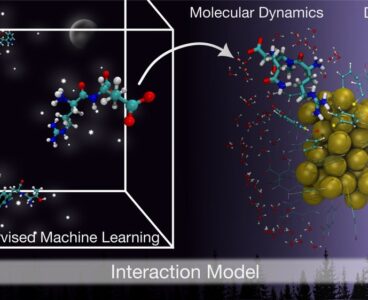

Researchers from the Nanoscience Center at the University of Jyväskylä, Finland, have developed a computational model that could expedite the use of nanomaterials in biomedical applications. Their machine learning framework is capable of predicting how proteins interact with ligand-stabilized gold nanoclusters, materials widely used in bioimaging, biosensing and targeted drug delivery. Gold nanoclusters are used…

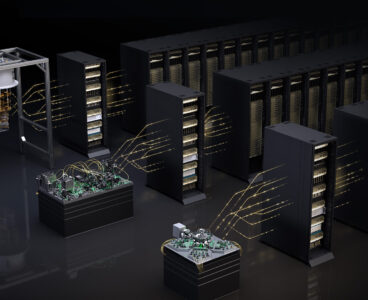

Sandia unveils Spectra, a reconfigurable supercomputer for nuclear stockpile simulations

Sandia National Laboratories and NextSilicon, a technology company, collaborated to create a supercomputer designed to prioritize tasks in real time, Sandia announced on Monday. The computer, called Spectra, could alter how the nation conducts high-stakes simulations for its nuclear deterrence mission. In other words, while it won’t top the TOP500 list of supercomputers, the prototype…

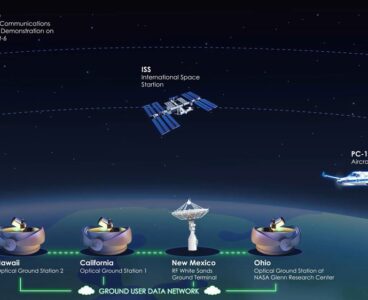

NASA R&D 100 Winner enables high-speed data transfer from space

High-Rate Delay-Tolerant Networking (HDTN) is software for streaming and networking communications in space. The software has the potential to enable a solar system internet, allowing space exploration teams to receive data from rovers and other space vehicles and to maintain connections between spacecraft and Earth. The software can transfer data up to 10 times faster…

Inside the connected lab: Elemental Machines’ CEO on taming alarms, drift and data chaos

Every lab has a Friday-night freezer story. The alarm chirps, people scramble, and someone winds up babysitting samples with a clipboard that was never meant to be a forensic record. As instruments fill the lab and teams shift from wielding pipettes to orchestrating cloud workflows and collaborating with AI agents, the margin for ad hoc…

Lab automation is “vaporizing”: Why the hottest innovation is invisible

Why you should read this report: Lab automation looks hot, but the usual indicators are quiet: patents are flat, vendors report uneven demand, and standard market metrics barely move. This report shows what those signals miss: where recent AI-drug-discovery capital actually landed, why “Lab Automation Engineer” roles increasingly require Python and APIs…

Maryland set for first subsea internet cable: AWS’s 320+ Tbps “Fastnet” to Ireland

Maryland is getting its first undersea internet cable, and it’s a monster. Amazon Web Services announced plans for “Fastnet,” a dedicated fiber optic system linking the state’s Eastern Shore to Ireland with enough raw power to stream 12.5 million HD films simultaneously. The project, set to be operational in 2028, represents AWS’s bet that customer…

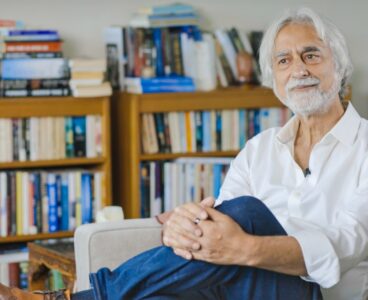

Google on how AI will extend researchers

Asked whether AI will lessen the need for researchers, Google’s head of Research Yossi Matias gave a clear answer. “The only scenario where you would need fewer researchers is if we assume we’ve answered almost all the major questions. I don’t think anyone believes that,” he said at Google’s flagship research conference in Mountain View.…

Nvidia and Alice & Bob unveil NVQLink to tighten GPU–quantum integration

Nvidia introduced NVQLink at its GTC Washington event, pitching the open system architecture as the missing low-latency bridge between quantum processors and GPU supercomputers. Paris- and Boston-based Alice & Bob said it is collaborating on the rollout, aligning its cat-qubit hardware and software with the new interconnect for real-time decoding, calibration and control. Alice &…

Kythera Labs’ Wayfinder remasters incomplete medical data for AI analysis

Healthcare data is often incomplete and inconsistent, limiting efforts to improve patient outcomes and operational efficiency. A 2021 report from Sage Growth found that only 20% of healthcare organizations fully trust their data. Because records follow patients across providers with shifting identifiers and coding schemes, the same encounter often appears multiple times or partially, breaking…

Could AI smell cancer? Science says yes

In 1982, Joy Milne detected her husband’s Parkinson’s disease with her heightened sense of smell. She wouldn’t realize the source of the scent until after her husband was diagnosed with Parkinson’s over a decade later. The couple attended a support group, and Milne smelled the disease on almost every person there. The Milnes’ case was…

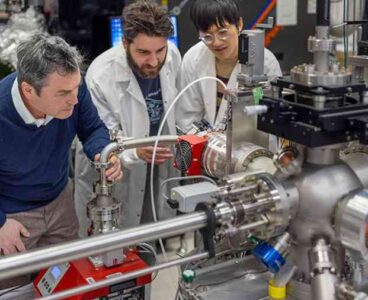

ORNL named on 20 R&D 100 Awards, including carbon-capture and AM tools

Oak Ridge National Laboratory was named on 20 of the 2025 R&D 100 Awards, 17 as lead developer and three as co-developer. The showing sets a new record for the lab, accounting for about one-fifth of all winners. Since the 1980s, ORNL has won more than 260 R&D 100 Awards. Our sister publication engineering.com recently…

Adviser Labs raises $1M to simplify cloud HPC for AI and scientific computing

Cloud GPUs are no longer uniformly scarce, though high-end models like Nvidia’s H100 and H200 can still be tight in some regions and at certain providers. A more consistent bottleneck is operational: teams with working models or simulations lose hours to identity management, schedulers, images and cost guardrails. For labs and R&D groups, that means…

Revealing the 2025 R&D 100 Awards Winners

The official 2025 R&D 100 Awards have been announced by R&D World. This worldwide science and innovation competition, now in its 63rd year, received entries from organizations around the world. This year’s judging panel included industry professionals from across the globe who evaluated breakthrough innovations in technology and science. The winners are listed below by…

R&D World announces 2025 R&D 100 Professional Award Winners

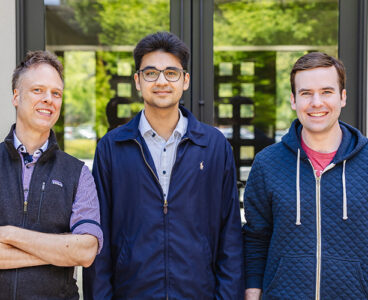

R&D World has announced the winners of the 2025 R&D 100 Professional Awards. The honorees were selected by a panel of 54 prestigious industry experts from around the globe. The list of 2025 winners follows, along with highlights from their nomination letters. These winners will be formally awarded at the R&D 100 Awards Banquet at…

Elsevier’s 121 million data point database is now searchable by AI

Elsevier, founded in 1880, is going all in on AI and data. In addition to publishing, Elsevier now offers several databases, learning resources and AI tools all aimed at supporting researchers. The latest release in this vein is a new AI-powered search engine for its chemistry database, Reaxys, which represents a fresh take on its…

6 R&D advances this week: a quantum computer in space and a record-breaking lightning bolt

This week in R&D: the first quantum computer in space is now orbiting the Earth; a potential new treatment for Alzheimer’s, thanks to cancer drugs; a startup is breaking ground on its first fusion power plant and says it is on track to deliver fusion energy by 2030; Google DeepMind announced its AI Earth mapping…

Phesi CEO on why phase 3 clinical trials fail

Phase 3 clinical trials are lengthy and expensive processes that could result in hundreds of millions of dollars in losses if they fail, enough to tank a mid-sized or small company. Direct costs alone tend to be in the tens of millions of dollars, yet failures are common. A study by researchers at MIT found…

Quantum computing edges closer to biotech reality in Moderna-IBM pact

In 2022, Moderna brought in approximately $19.26 billion in revenue, largely thanks to its groundbreaking Spikevax COVID-19 vaccine. In January 2025, the company projected revenue of $1.5 billion to $2.5 billion. To reverse this downturn, the company is pushing to broaden mRNA’s applications into cancer, rare diseases and other areas, but that requires cracking…

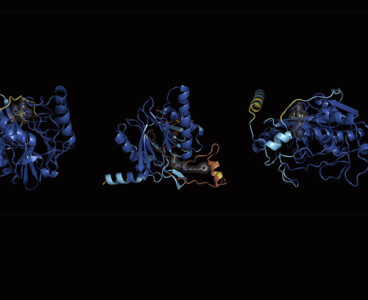

SandboxAQ’s SAIR dataset turns 5.2 M protein‑ligand structures into ground‑truth fuel for AI

SandboxAQ, the Alphabet spinoff whose name reflects its work at the intersection of AI and quantum techniques, thinks testing drugs on animals is already passé. “It’s not so much that we’re going to somehow move a mouse model into computers,” said Nadia Harhen, general manager of AI simulation at the company. “It’s that we hope…

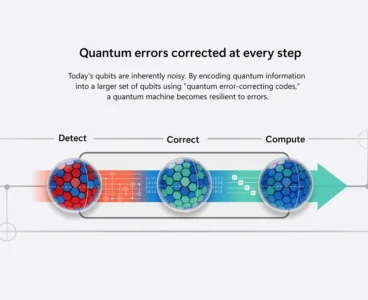

Microsoft’s 4D geometric codes slash quantum errors by 1,000x

Microsoft Quantum has unveiled a family of new four-dimensional (4D) geometric codes that can reduce the error rates of physical qubits by orders of magnitude to reach the level required for reliable quantum circuits. Available in the Microsoft Quantum compute platform, the error correction codes deliver a 1,000-fold reduction in quantum error rates (from 10⁻³…

How IBM’s quantum architecture could design materials physics can’t yet explain

Big Blue is making a bold claim. “We feel at IBM, we’ve cracked the code to quantum error correction, and it’s our plan to build the first large-scale fault-tolerant quantum computer, which we call IBM Quantum Starling, in 2029,” Jay Gambetta, vice president of IBM Quantum, announced at a recent press conference. From trial and…

Berkeley Lab’s Dell and NVIDIA-powered ‘Doudna’ supercomputer to enable real-time data access for 11,000 researchers

Designed to help 11,000 researchers “think bigger and discover sooner,” Lawrence Berkeley National Laboratory’s Doudna supercomputer, launching in 2026, will be central to an integrated research fabric. This Dell and NVIDIA-powered system will ingest data from telescopes, fusion tokamaks, and genome sequencers via the Energy Sciences Network (ESnet). It will enable near real-time analysis and…

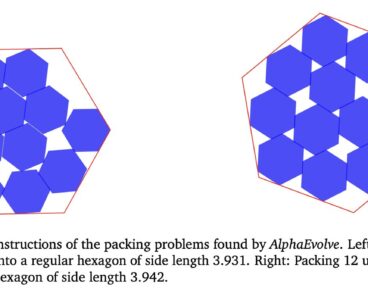

Why Google DeepMind’s AlphaEvolve incremental math and server wins could signal future R&D payoffs

Google’s data centers have gained 0.7% more operational capacity thanks to AlphaEvolve, an AI agent that iteratively refines code for optimal performance. This same system also advanced a long-standing geometry challenge by finding a new way to pack 11 identical hexagons. Those are not the only advances. AlphaEvolve also discovered superior algorithms for matrix multiplication…

PsiQuantum’s $6B valuation lures Nvidia into quantum hardware

First came “Quantum Day,” Nvidia’s March 20 showcase at GTC, which put CEO Jensen Huang side-by-side with quantum players he had dismissed on January 7 at CES. Now, according to The Information and Reuters, Huang is in talks to invest in photonic-qubit upstart PsiQuantum, underscoring potentially changed thinking after quipping at CES that “very useful” quantum machines…

Alice & Bob stakes €46 million on Paris quantum fab, taps QM and Bluefors

Cat-qubit developer Alice & Bob plans to spend about $50 million on a 4,000-square-meter quantum lab in Paris, pairing control gear from Israeli quantum control system firm Quantum Machines with 20 dilution refrigerators from Bluefors, a cryogenic systems maker, to speed its push toward fault-tolerant quantum chips and a 100-logical-qubit system by 2030. Alice & Bob…