Deep Learning Software Speeds Up Drug Discovery

Next-generation Photonic Memory Devices Are Light-written, Ultrafast and Energy Efficient

Light is the most energy-efficient way of moving information. Yet light has one major limitation: it is difficult to store. In fact, data centers rely primarily on magnetic hard drives, in which information is transferred at an energy cost that is now soaring. Researchers at the Institute of Photonic…

NCSA Brings Dark Energy Survey Data to Science Community into 2021

After scanning in depth about a quarter of the southern skies for six years and cataloguing hundreds of millions of distant galaxies, the Dark Energy Survey (DES) finished taking data on January 9, 2019. The National Center for Supercomputing Applications (NCSA) at the University of Illinois will continue refining and serving this data for use by scientists into 2021. The…

Creating a ‘Virtual Seismologist’

Understanding earthquakes is a challenging problem, not only because they are potentially dangerous but also because they are complicated phenomena that are difficult to study. Interpreting the massive, often convoluted data sets recorded by earthquake monitoring networks is a Herculean task for seismologists, but the effort involved in producing accurate analyses could significantly improve…

Lilly Expands Deal to Analyze Patient Data From Smartphones and Connected Sensors

Big Data Used to Predict the Future

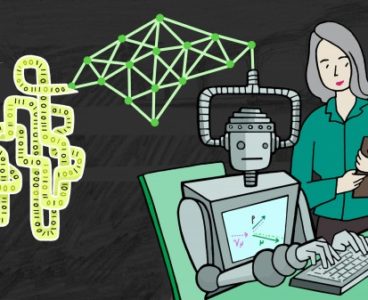

AI Capable of Outlining Information From Thousands of Scientific Papers in a Single Chart

NIMS and the Toyota Technological Institute at Chicago have jointly developed a Computer-Aided Material Design (CAMaD) system capable of extracting information on fabrication processes, material structures and material properties (factors vital to material design) and of organizing and visualizing the relationships between them. The use of this system enables information from thousands of scientific and technical articles…

Machine Learning Yields Fresh Insights Into Pressure Injury Risks

Researchers Teach ‘Machines’ to Detect Medicare Fraud

Quantum Computers Tackle Big Data With Machine Learning

Every two seconds, sensors measuring the United States’ electrical grid collect 3 petabytes of data, the equivalent of 3 million gigabytes. Data analysis on that scale is a challenge when crucial information is stored in an inaccessible database. But researchers at Purdue University are working on a solution, combining quantum algorithms with classical computing on small-scale…

Chemistry Professor Awarded NSF Grant to Improve Data Science Framework

Dr. Stuart Chalk, a University of North Florida chemistry professor, has been awarded a grant from the National Science Foundation to test and improve upon his data science framework, SciData, which will help make the integration of scientific data more efficient for researchers. The $600,000 NSF grant will focus on linking chemical data to health,…

Understanding Deep-Sea Images With Artificial Intelligence

Evaluating very large amounts of data is becoming increasingly important in ocean research. Diving robots or autonomous underwater vehicles, which carry out measurements independently in the deep sea, can now record large quantities of high-resolution images. To evaluate these images scientifically and sustainably, a number of prerequisites have to be fulfilled…

New Institute to Address Massive Data Demands from Upgraded LHC

Interpretation of Material Spectra Can Be Data-driven Using Machine Learning

Spectroscopy techniques are commonly used in materials research because they allow materials to be identified by their unique spectral features. These features are correlated with specific material properties, such as atomic configuration and chemical bond structure. Modern spectroscopy methods have enabled the rapid generation of enormous numbers of material spectra, but it is necessary to interpret…

Topology, Physics and Machine Learning Take on Climate Research Data Challenges

Two PhD students who first came to Lawrence Berkeley National Laboratory (Berkeley Lab) as summer interns in 2016 are spending six months a year at the lab through 2020 developing new data analytics tools that could dramatically impact climate research and other large-scale science data projects. Grzegorz Muszynski is a PhD student at the University…

Scientists Harness the Power of Deep Learning to Better Understand the Universe

A Big Data Center collaboration between computational scientists at the National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory (Berkeley Lab) and engineers at Intel and Cray has yielded another first in the quest to apply deep learning to data-intensive science: CosmoFlow, the first large-scale science application to use the TensorFlow framework on a CPU-based high…

Particle Physicists Team Up With AI to Solve Toughest Science Problems

Experiments at the Large Hadron Collider (LHC), the world’s largest particle accelerator, located at the European particle physics laboratory CERN, produce about a million gigabytes of data every second. Even after reduction and compression, the data amassed in just one hour is comparable to the volume Facebook collects in an entire year: too much…

New NSF Awards Support the Creation of Bio-Based Semiconductors

To address a worldwide need for data storage that far outstrips today’s capabilities, federal agencies and a technology research consortium are investing $12 million in new research through the Semiconductor Synthetic Biology for Information Processing and Storage Technologies (SemiSynBio) program. The goal is to create storage systems that integrate synthetic biology with semiconductor technology. SemiSynBio,…

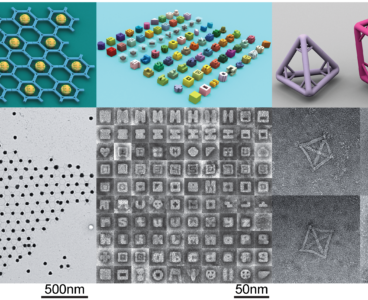

Using Light for Next-generation Data Storage

Nanoscale salt crystals encoded with data using laser light could be the next data storage technology of choice, following research by Australian scientists. The researchers from the University of South Australia and the University of Adelaide, in collaboration with the University of New South Wales, have demonstrated a novel and energy-efficient approach…

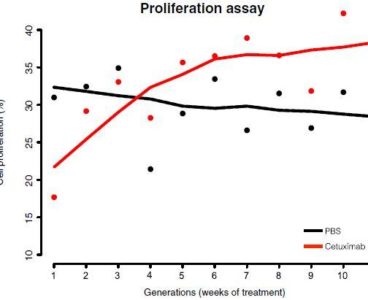

Computer Algorithm Maps Cancer Resistance to Drugs, Therapy

Researchers at the Johns Hopkins Kimmel Cancer Center are developing new methods for studying how treatment resistance evolves in head and neck cancer. The scientists wanted to examine how cancers acquire resistance to treatment over time and whether those changes could be modeled computationally to determine patient-specific timelines of resistance. The research…

‘Breakthrough’ Algorithm Exponentially Faster Than Any Previous One

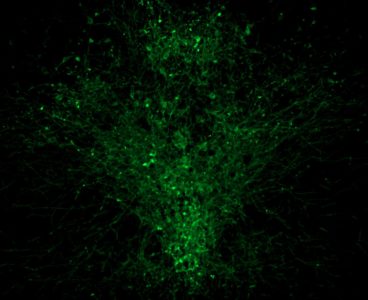

Computational Model Analysis Reveals Serotonin Speeds Learning

A new computational model, designed by researchers at UCL and based on data from the Champalimaud Centre for the Unknown, reveals that serotonin, one of the most widespread chemicals in the brain, can speed up learning. Serotonin is thought to mediate communication between neural cells and to play an essential role in functional, and dysfunctional, cognition. For a…

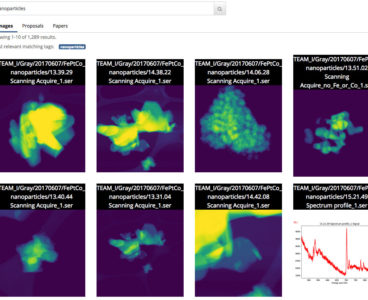

Researchers Use Machine Learning to Search Science Data

As scientific datasets grow in both size and complexity, labeling, filtering and searching this deluge of information has become a laborious, time-consuming and sometimes impossible task without the help of automated tools. With this in mind, a team of researchers from Lawrence Berkeley National Laboratory (Berkeley Lab) and UC Berkeley is developing…